The Power and Versatility of the Linux Operating System

In a world dominated by technology, the operating system plays a crucial role in shaping our digital experiences. Among the myriad of operating systems available, Linux stands out as a powerful and versatile option that has gained substantial popularity over the years. With its open-source nature and robust capabilities, Linux has become a go-to choice for tech enthusiasts, developers, and businesses alike.

At its core, Linux is a Unix-like operating system built around the Linux kernel, which Linus Torvalds first released in 1991. It was modelled on Unix design rather than derived from Unix source code, and it has grown on the principles of openness, collaboration, and community-driven development. These foundational values have paved the way for its widespread adoption and continuous improvement.

One of the key strengths of Linux lies in its open-source nature. Unlike proprietary operating systems such as Windows or macOS, Linux is freely available to anyone who wants to use it or contribute to its development. This openness fosters a vibrant community of developers who constantly work to enhance its functionality and security.

Linux’s versatility is another aspect that sets it apart from other operating systems. It can be found running on a wide range of devices, from desktop computers and servers to smartphones, embedded systems, and even supercomputers. Its adaptability makes it an ideal choice for various applications across industries.

One notable feature of Linux is its stability and reliability. Thanks to its modular design and efficient resource management, Linux-based systems are known for their robustness and ability to handle heavy workloads with ease. This makes them particularly suitable for server environments where uptime and performance are critical.

Furthermore, Linux offers extensive customization options that allow users to tailor their computing experience according to their specific needs. With numerous desktop environments available such as GNOME, KDE Plasma, Xfce, or LXDE, users can choose an interface that suits their preferences while enjoying a seamless user experience.

Security is another area where Linux excels. Its open-source nature enables a vast community of developers to review and audit the code, making it easier to identify and fix vulnerabilities promptly. Additionally, Linux benefits from a strong focus on security practices, with frequent updates and patches being released to ensure the safety of users’ data and systems.

For developers, Linux provides a fertile ground for innovation. Its extensive range of development tools, compilers, libraries, and frameworks makes it an ideal environment for creating software applications. The availability of powerful command-line tools empowers developers to automate tasks efficiently and streamline their workflows.

Businesses also find value in Linux due to its cost-effectiveness and scalability. With no licensing fees associated with the operating system itself, Linux offers significant savings compared to proprietary alternatives. Moreover, its stability and ability to handle high-demand workloads make it an excellent choice for enterprise-level applications and server deployments.

In conclusion, the Linux operating system has established itself as a force to be reckoned with in the tech world. Its open-source nature, versatility, stability, security features, customization options, and developer-friendly environment have contributed to its widespread adoption across various industries. Whether you are an enthusiast looking for a reliable desktop experience or a business seeking cost-effective solutions for your infrastructure needs, Linux offers a compelling alternative that continues to shape the future of computing.

9 Tips for Maximizing Your Linux Operating System

- Use the command line to quickly and efficiently perform tasks.

- Keep your system up to date by regularly running ‘sudo apt-get update’ and ‘sudo apt-get upgrade’ (on Debian-based distributions such as Ubuntu).

- Install a firewall to protect your system from malicious software, hackers, and other threats.

- Utilise virtual machines to test new applications before installing them on your main system.

- Learn how to use SSH for secure remote connections between systems or devices.

- Take advantage of version control systems like Git for managing changes in code or configuration files over time.

- Make regular backups of important data and store them securely offsite in case of hardware failure or data loss.

- Set up automated monitoring tools to detect suspicious activity on your server.

- Familiarise yourself with the most popular Linux distributions (such as Ubuntu, Fedora, Debian) so you can choose the right one for your needs.

Unlocking Efficiency: Harness the Power of the Linux Command Line

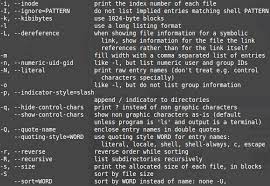

In the vast realm of the Linux operating system, one of its most powerful tools lies within the command line interface. While graphical user interfaces (GUIs) offer user-friendly interactions, mastering the command line can elevate your efficiency and productivity to new heights. By embracing this often-overlooked aspect of Linux, you can swiftly perform tasks and unlock a world of possibilities.

The command line provides direct access to the heart of your Linux system. Instead of navigating through menus and windows, you can execute commands by typing them directly into a terminal. This streamlined approach allows for precise control and rapid execution, making it ideal for both simple tasks and complex operations.

One significant advantage of using the command line is its speed. With just a few keystrokes, you can accomplish tasks that might take several clicks or menu selections in a GUI. Whether it’s installing software packages, managing files, or configuring system settings, executing commands through the terminal offers unparalleled efficiency.

Moreover, the command line empowers you with a vast array of tools and utilities that may not be readily available in GUI-based interfaces. From powerful text-processing tools like grep and sed to network diagnostics using ping and traceroute, the command line provides an extensive toolkit for various purposes. Learning these tools opens up new avenues for problem-solving and automation.

Another benefit is scriptability. By combining multiple commands into scripts or creating shell scripts with conditional statements and loops, you can automate repetitive tasks or complex workflows. This ability to write scripts not only saves time but also ensures consistency in executing tasks across different systems.
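As a small illustration of scriptability, the sketch below combines a loop with a conditional. The scratch directory and file names are invented for the example; a real script would point at your own files.

```shell
#!/bin/sh
# Sketch: loop over files and branch on a condition.
# The scratch directory and file names are hypothetical.
workdir=$(mktemp -d)
printf 'hello\n' > "$workdir/notes.txt"
: > "$workdir/empty.txt"              # create an empty file

empty_count=0
for f in "$workdir"/*.txt; do
    if [ -s "$f" ]; then              # -s: file exists and is not empty
        echo "$(basename "$f") has content"
    else
        echo "$(basename "$f") is empty"
        empty_count=$((empty_count + 1))
    fi
done
echo "empty files: $empty_count"
rm -rf "$workdir"                     # clean up the scratch directory
```

Saved to a file and made executable, a script like this can be run the same way on any machine with a POSIX shell.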

Navigating through directories is also more efficient on the command line. With simple commands like cd (change directory) and ls (list files), you can swiftly traverse your file system hierarchy without relying on graphical file managers. Additionally, wildcard characters such as * and ? enable powerful pattern matching when working with files or directories.
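To see navigation and wildcards together, here is a minimal sketch that works inside a throwaway directory. The directory layout and file names are assumptions made up for the example.

```shell
#!/bin/sh
# Sketch: move around with cd/ls and match names with * and ?.
# Everything is created in a throwaway directory for the example.
demo=$(mktemp -d)
mkdir -p "$demo/reports"
touch "$demo/reports/q1.csv" "$demo/reports/q2.csv" \
      "$demo/reports/q10.csv" "$demo/reports/readme.md"

cd "$demo/reports" || exit 1
ls                                # list everything in the current directory
all_csv=$(ls *.csv | wc -l)       # * matches any run of characters
single=$(ls q?.csv | wc -l)       # ? matches exactly one character
echo "csv files: $all_csv, single-character quarters: $single"
cd / && rm -rf "$demo"            # leave and remove the scratch directory
```

Here `*.csv` picks up all three CSV files, while `q?.csv` skips `q10.csv` because `?` stands for exactly one character.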

The command line also provides direct access to system logs, allowing you to troubleshoot issues and monitor system activity in real-time. Commands like tail, grep, and journalctl enable you to filter and search log files efficiently, providing valuable insights into the inner workings of your Linux system.
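The following sketch shows the kind of log filtering described above, using tail and grep on a small sample file. The log lines are invented for the example; real logs usually live under /var/log or in the systemd journal.

```shell
#!/bin/sh
# Sketch: filter a log with tail and grep. The log content is invented
# for the example.
log=$(mktemp)
cat > "$log" <<'EOF'
Jan 10 10:00:01 host sshd[101]: Accepted password for alice
Jan 10 10:00:05 host sshd[102]: Failed password for root
Jan 10 10:00:09 host cron[200]: job started
Jan 10 10:00:12 host sshd[103]: Failed password for admin
EOF

tail -n 2 "$log"                              # show the last two lines
failed=$(grep -c 'Failed password' "$log")    # count matching lines
echo "failed logins: $failed"
rm -f "$log"
# On systemd machines, 'journalctl -u ssh -f' follows a unit's log live
# (the unit name varies by distribution, for example ssh or sshd).
```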

While the command line may seem daunting at first, learning a few essential commands can go a long way. Start by familiarising yourself with basic commands such as ls, cd, cp, mv, rm, and mkdir. As you gain confidence, explore more advanced commands and their options. Online resources and tutorials are abundant for those seeking guidance on their command line journey.

In conclusion, embracing the power of the Linux command line can significantly enhance your efficiency when working with this versatile operating system. By mastering a handful of commands and exploring their capabilities further, you can streamline your workflow, automate tasks, and gain deeper insights into your system. So why not take a leap into the world of terminal-based productivity? Unleash the potential of the command line and unlock a new level of efficiency in your Linux experience.

Keep your system up to date by regularly running ‘sudo apt-get update’ and ‘sudo apt-get upgrade’ (on Debian-based distributions such as Ubuntu).

The Importance of Keeping Your Linux System Up to Date

When it comes to maintaining a healthy and secure Linux operating system, regular updates play a crucial role. Updating your system ensures that you have the latest bug fixes, security patches, and new features, helping to enhance performance and protect against potential vulnerabilities. On Debian-based distributions such as Ubuntu, one simple way to stay up to date is to regularly run the commands ‘sudo apt-get update’ and ‘sudo apt-get upgrade’; other distributions provide equivalent commands through their own package managers, such as dnf on Fedora.

The ‘sudo apt-get update’ command is used to refresh the package lists on your system. It retrieves information about available updates from the software repositories configured on your machine. By running this command, you ensure that your system has the most current information about software packages and their versions.

After updating the package lists, running ‘sudo apt-get upgrade’ installs the available updates for your installed packages. It downloads and installs newer versions of packages you already have. It does not remove installed packages or pull in packages that are not already installed, so an update that would require either is held back.

Regularly running these commands is essential for several reasons. Firstly, it keeps your system secure by patching any known vulnerabilities in software packages. Developers actively work on identifying and addressing security flaws, so staying up to date helps safeguard your system against potential threats.

Secondly, software updates often include bug fixes and performance improvements. By keeping your system updated, you ensure that you have access to these enhancements, which can lead to a smoother user experience and improved stability.

Furthermore, running regular updates helps maintain compatibility with other software components on your system. As new features or changes are introduced in different packages, it is important to keep all components in sync to avoid conflicts or compatibility issues.

It is worth noting that while updating packages is generally beneficial, it’s always a good idea to review the changes introduced by each update before proceeding with installation. Occasionally, an update may introduce changes that could affect specific configurations or dependencies in your setup. By reviewing update details beforehand, you can make informed decisions and take any necessary precautions to ensure a smooth update process.
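One way to put this into practice on a Debian-based system is to preview an upgrade before applying it. The sketch below wraps the steps in a function so that nothing runs until you call it; the function name `update_system` is made up for this example.

```shell
#!/bin/sh
# Sketch for Debian-based systems. Nothing runs until the function is
# called; the function name is hypothetical.
update_system() {
    if ! command -v apt-get >/dev/null 2>&1; then
        echo "apt-get not found; use your distribution's package manager" >&2
        return 1
    fi
    sudo apt-get update || return 1          # refresh the package lists
    apt-get --simulate upgrade || return 1   # preview the changes, installs nothing
    sudo apt-get upgrade                     # install the updates (asks to confirm)
}
# Usage: update_system
```

The simulation step prints what would be upgraded, which gives you a chance to spot a package you would rather hold back before confirming the real upgrade.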

In conclusion, keeping your Linux system up to date is crucial for maintaining security, performance, and compatibility. By regularly running ‘sudo apt-get update’ and ‘sudo apt-get upgrade’, you can ensure that your system is equipped with the latest bug fixes, security patches, and enhancements. Make it a habit to check for updates frequently and stay proactive in keeping your Linux system healthy and secure.

Install a firewall to protect your system from malicious software, hackers, and other threats.

Enhance Your Linux Security: Install a Firewall for Ultimate Protection

When it comes to safeguarding your Linux system, one of the most crucial steps you can take is to install a firewall. Acting as a virtual barrier, a firewall is the first line of defense against malicious software, hackers, and other potential threats lurking on the internet. By implementing this essential security measure, you can significantly fortify your system’s resilience and protect your valuable data.

A firewall serves as a gatekeeper that carefully monitors incoming and outgoing network traffic. It acts as a filter, analyzing data packets and determining whether they should be allowed to pass through or if they pose a potential risk. By setting up rules and configurations, you can define which connections are permitted and which should be blocked, effectively creating an additional layer of protection.

Installing a firewall on your Linux operating system is relatively straightforward. There are several options available, with some distributions even including built-in firewall solutions. One popular choice is iptables, a command-line utility that allows you to configure advanced network filtering rules. While iptables may require some technical knowledge to set up initially, it offers extensive customization options for fine-tuning your system’s security.

For those seeking user-friendly alternatives, UFW (Uncomplicated Firewall) offers a simplified command-line front end, and GUFW (Graphical Uncomplicated Firewall) adds a graphical interface on top of it. With GUFW, users can manage their firewall settings with simple point-and-click actions.

Once your firewall is installed and configured, it will diligently monitor all incoming and outgoing network traffic based on the predefined rules you’ve established. Suspicious or unauthorized connections will be blocked automatically, preventing potential threats from infiltrating your system.
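As a rough starting point, a basic policy can be set up from the command line with UFW. The sketch below assumes UFW is installed and wraps the commands in a function so that nothing changes until you call it; the function name is hypothetical and the rules should be adapted to the services you actually run.

```shell
#!/bin/sh
# Sketch of a basic UFW policy. Nothing runs until the function is called;
# the function name is hypothetical and the rules are only a starting point.
setup_basic_firewall() {
    command -v ufw >/dev/null 2>&1 || { echo "ufw is not installed" >&2; return 1; }
    sudo ufw default deny incoming     # block unsolicited inbound traffic
    sudo ufw default allow outgoing    # permit outbound connections
    sudo ufw allow ssh                 # keep remote access open before enabling
    sudo ufw enable                    # turn the firewall on
    sudo ufw status verbose            # show the active rules
}
# Usage: setup_basic_firewall
```

Allowing SSH before enabling the firewall matters on remote machines, since otherwise the new rules could lock you out of your own session.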

By installing a firewall on your Linux system, you gain several significant advantages in terms of security:

- Protection against malicious software: A firewall acts as an effective shield against malware attempting to exploit vulnerabilities in your system. It monitors incoming connections and blocks any attempts from malicious entities trying to gain unauthorized access.

- Defense against hackers: Hackers are constantly probing networks for vulnerabilities. A firewall helps thwart their efforts by controlling access to your system, making it significantly more challenging for them to breach your defences.

- Prevention of data breaches: With a firewall in place, you can regulate outbound connections as well. This ensures that sensitive information remains within your network and prevents unauthorized transmission of data.

- Peace of mind: Knowing that your Linux system is fortified with a firewall provides peace of mind, allowing you to focus on your tasks without worrying about potential security threats.

Remember, while a firewall is an essential security measure, it should not be considered the sole solution for protecting your Linux system. Regularly updating your software, employing strong passwords, and practicing safe browsing habits are equally important measures in maintaining a secure computing environment.

By installing a firewall on your Linux operating system, you take a proactive step towards fortifying your digital fortress against potential threats. With enhanced protection against malicious software, hackers, and data breaches, you can enjoy the benefits of a secure and reliable computing experience while keeping your valuable information safe from harm.

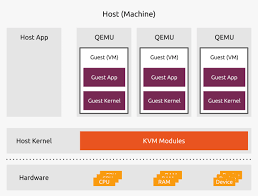

Utilise virtual machines to test new applications before installing them on your main system.

Enhance Your Linux Experience: Utilize Virtual Machines for Application Testing

When it comes to exploring new applications on your Linux operating system, it’s always wise to exercise caution. You may be hesitant to install unfamiliar software directly on your main system, as it could potentially disrupt its stability or compromise its security. Thankfully, Linux offers a powerful solution: virtual machines.

Virtual machines (VMs) allow you to create isolated and self-contained environments within your existing operating system. By utilizing VMs, you can test new applications and software without risking any adverse effects on your primary setup. This invaluable tool provides a safe playground for experimentation and evaluation.

Setting up a virtual machine is relatively straightforward. There are several popular virtualization platforms available for Linux, such as VirtualBox, VMware Workstation, or KVM (Kernel-based Virtual Machine). These tools enable you to create virtual instances of different operating systems within your main Linux environment.

Once you’ve set up a virtual machine, you can install the application you wish to test on it. This allows you to evaluate its functionality, performance, and compatibility without affecting your primary system. If the application doesn’t meet your expectations or causes any issues within the virtual environment, you can simply delete the VM and start afresh.

Using virtual machines for application testing offers several advantages. Firstly, it provides an extra layer of security by isolating the software from your main system. If the application contains malware or has unintended consequences, the damage is normally confined to the virtual machine rather than reaching your primary setup or your data.

Secondly, VMs enable you to test software across different operating systems without having to set up separate physical machines. This flexibility allows developers and users alike to verify cross-platform compatibility effortlessly.

Moreover, utilizing virtual machines saves time by avoiding potential conflicts between applications installed on your main system. It eliminates the need for uninstalling unwanted software or dealing with complex dependency issues that may arise from installing unfamiliar applications directly onto your primary setup.

Additionally, virtual machines provide a reliable and reproducible testing environment. You can take snapshots of the VM at different stages of testing or before making significant changes. If something goes wrong, you can easily revert to a previous snapshot, saving you from potential headaches and troubleshooting efforts.
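With VirtualBox, for instance, snapshots can be taken and restored from the command line through its VBoxManage tool. The sketch below is a minimal illustration under that assumption; the function names and the VM name "test-vm" are hypothetical, and other hypervisors use different commands.

```shell
#!/bin/sh
# Sketch using VirtualBox's VBoxManage CLI. The function names and the VM
# name "test-vm" are hypothetical; other hypervisors use different commands.
vm_checkpoint() {   # usage: vm_checkpoint <vm-name> <snapshot-name>
    [ $# -eq 2 ] || { echo "usage: vm_checkpoint <vm> <snapshot>" >&2; return 2; }
    VBoxManage snapshot "$1" take "$2"
}
vm_rollback() {     # usage: vm_rollback <vm-name> <snapshot-name>
    [ $# -eq 2 ] || { echo "usage: vm_rollback <vm> <snapshot>" >&2; return 2; }
    VBoxManage snapshot "$1" restore "$2"   # the VM must not be running
}
# Usage: vm_checkpoint test-vm before-install
#        vm_rollback   test-vm before-install
```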

Virtual machines are not only useful for testing applications but also for experimenting with new configurations, trying out different Linux distributions, or even learning about other operating systems. The possibilities are endless and limited only by your imagination.

So, next time you come across an intriguing application that you’d like to try on your Linux system, consider utilizing virtual machines as your testing ground. By doing so, you can explore new software with confidence while preserving the stability and security of your primary setup. Embrace the power of virtualization and unlock a world of experimentation within your Linux environment.

Learn how to use SSH for secure remote connections between systems or devices.

Enhance Your Linux Experience: Secure Remote Connections with SSH

In the vast realm of the Linux operating system, there are countless features and tools that can elevate your computing experience. One such tool that stands out is SSH (Secure Shell), a powerful utility that enables secure remote connections between systems or devices. Whether you’re a seasoned Linux user or just starting out, learning how to use SSH can greatly enhance your ability to access and manage remote machines securely.

SSH is a protocol that allows you to establish encrypted connections between computers over an unsecured network, such as the internet. It provides a secure channel through which you can remotely access and control another machine, execute commands, transfer files, or even forward network traffic. This makes it an invaluable tool for system administrators, developers, and anyone who needs to manage multiple machines from a central location.

One of the key advantages of using SSH is its robust security measures. By encrypting all data transmitted between client and server, SSH ensures that sensitive information remains confidential and protected from potential eavesdroppers. This is particularly crucial when accessing remote machines over public networks where security risks are higher.

To get started with SSH on your Linux system, you’ll need two components: an SSH client on the machine you connect from and an SSH server on the machine you connect to. Most Linux distributions ship with the client pre-installed, while the server often needs to be installed and enabled separately. Both are available through package managers like apt or yum.

Once you have both client and server components set up, using SSH becomes straightforward. To initiate a connection from your local machine to a remote one, simply open your terminal and enter the following command:

```
ssh username@remote_IP_address
```

Replace “username” with the appropriate username for the remote machine and “remote_IP_address” with its actual IP address or hostname. Upon entering this command, you’ll be prompted to enter the password associated with the specified username on the remote machine. Once authenticated successfully, you’ll gain access to the remote machine’s command-line interface, allowing you to execute commands as if you were physically present.

SSH also supports key-based authentication, which offers an even higher level of security and convenience. Instead of relying on passwords, you can generate a public-private key pair on your local machine and copy the public key to the remote machine. When you then connect via SSH, the remote machine authenticates you by checking that you hold the matching private key, which never leaves your local machine. This method removes the need to enter the account password each time and provides a more secure means of authentication.
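The key-based setup can be sketched as follows, assuming the OpenSSH tools are installed. The key here is written to a scratch directory with an empty passphrase purely so the example runs unattended; for real use, keep the default location under ~/.ssh and set a passphrase. The address "username@remote_IP_address" is the same placeholder used earlier.

```shell
#!/bin/sh
# Sketch: generate a key pair with ssh-keygen. The scratch directory and
# the empty passphrase are for demonstration only.
keydir=$(mktemp -d)
if command -v ssh-keygen >/dev/null 2>&1; then
    ssh-keygen -q -t ed25519 -N '' -f "$keydir/id_ed25519" -C "demo key"
    key_files=$(ls "$keydir")     # private key plus the matching .pub file
    echo "$key_files"
fi
rm -rf "$keydir"
# With a real key in the default location, install the public half on the
# remote machine (asks for the account password once), then connect:
#   ssh-copy-id -i ~/.ssh/id_ed25519.pub username@remote_IP_address
#   ssh username@remote_IP_address
```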

In addition to secure remote shell access, SSH also enables file transfers between systems using tools like SCP (Secure Copy) or SFTP (SSH File Transfer Protocol). These utilities allow you to securely transfer files between your local machine and remote servers or vice versa.

In conclusion, learning how to use SSH for secure remote connections is an invaluable skill that can greatly enhance your Linux experience. By providing encrypted communication channels and robust authentication methods, SSH ensures that your interactions with remote machines remain confidential and secure. Whether you’re managing servers, developing applications, or simply accessing files on different devices, SSH empowers you with a reliable and protected means of connecting across networks.

Take advantage of version control systems like Git for managing changes in code or configuration files over time.

Unlocking the Power of Version Control Systems: Git and Linux

In the fast-paced world of software development, managing changes in code or configuration files is essential. Keeping track of modifications, collaborating with team members, and reverting to previous versions can be a daunting task without the right tools. That’s where version control systems like Git come into play, revolutionizing the way we handle code and configuration management on Linux.

Git, a distributed version control system, has gained immense popularity among developers worldwide. Originally created by Linus Torvalds (the same visionary behind Linux), Git offers a seamless solution for tracking changes in files over time. Whether you’re working on a small personal project or collaborating with a large team, Git provides an efficient and reliable framework for managing your codebase.

One of the key advantages of using Git is its decentralized nature. Each developer has their own local copy of the repository, allowing them to work offline and make changes independently. This autonomy means nobody blocks anyone else while working; if two people change the same lines, Git flags the conflict when the work is merged so it can be resolved deliberately.

Git’s branching and merging capabilities are invaluable when it comes to collaboration. Branches allow developers to create separate lines of development for specific features or fixes without affecting the main codebase. Once changes are tested and deemed ready, they can be merged back into the main branch effortlessly. This streamlined workflow promotes efficient teamwork while maintaining code integrity.

Another significant benefit of using Git is its ability to track changes at a granular level. Every modification made to files is recorded as a commit, complete with details such as who made the change and when it occurred. This comprehensive history enables developers to understand why certain decisions were made and provides an audit trail for future reference.

Git also empowers developers to experiment freely without fear of losing work or introducing irreversible errors. By creating branches for experimentation or bug fixes, developers can test ideas without impacting the stable parts of their codebase. If things don’t go as planned, it’s easy to discard or revert changes, ensuring the integrity of the project.

Furthermore, Git integrates seamlessly with popular code hosting platforms like GitHub and GitLab. These platforms provide a centralized location for storing and sharing repositories, making collaboration and code review a breeze. Team members can review each other’s work, suggest improvements, and track progress efficiently.

While Git is commonly associated with software development, its benefits extend beyond coding. Configuration files play a vital role in Linux systems, governing various aspects of their behavior. By leveraging Git for configuration management, system administrators can track changes made to critical files such as network configurations or system settings. This approach ensures that any modifications are properly documented and can be easily rolled back if needed.
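The commit, branch, and merge workflow described above can be tried safely in a throwaway repository. The sketch below assumes Git is installed; the file name, branch name, and identity are invented for the example.

```shell
#!/bin/sh
# Sketch: commit, branch, and merge in a throwaway repository.
# Assumes git is installed; file, branch, and identity are invented.
repo=$(mktemp -d)
cd "$repo" || exit 1
git init -q
git config user.name "Example User"            # identity for this repo only
git config user.email "user@example.com"
main_branch=$(git symbolic-ref --short HEAD)   # "main" or "master", depending on setup

echo "port=8080" > app.conf
git add app.conf
git commit -q -m "Add initial configuration"

git checkout -q -b try-new-port                # separate line of development
echo "port=9090" > app.conf
git commit -q -am "Experiment with a different port"

git checkout -q "$main_branch"
git merge -q try-new-port                      # fast-forward: main has not moved
git log --oneline                              # history, newest commit first
commit_count=$(git rev-list --count HEAD)
cd / && rm -rf "$repo"
```

Had the experiment gone badly, deleting the branch instead of merging it would have left the original configuration untouched.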

In conclusion, version control systems like Git have revolutionized the way we manage changes in code and configuration files on Linux. The decentralized nature of Git lets people work independently, while its branching and merging capabilities streamline teamwork and bring conflicting changes to light when they are merged. With granular change tracking and integration with popular hosting platforms, Git provides an efficient framework for developers to work together seamlessly. So why not take advantage of this powerful tool? Embrace Git and unlock a world of possibilities in managing your codebase or configuration files with ease on Linux.

Make regular backups of important data and store them securely offsite in case of hardware failure or data loss.

Protect Your Data: The Importance of Regular Backups in the Linux Operating System

In the fast-paced digital age, our data is more valuable than ever. From cherished memories to critical work files, losing important data can be devastating. That’s why it’s crucial to make regular backups of your important data in the Linux operating system and store them securely offsite. This simple tip can save you from the heartache and frustration of hardware failure or unexpected data loss.

The Linux operating system provides a robust and reliable platform for your computing needs. However, no system is immune to hardware failures or unforeseen events that can lead to data loss. Whether it’s a hard drive crash, accidental deletion, or a malware attack, having a backup strategy in place ensures that your valuable information remains safe and recoverable.

Creating regular backups should be an integral part of your Linux routine. Fortunately, Linux offers various tools and methods to facilitate this process. One popular option is using the command-line tool “rsync,” which allows you to synchronize files and directories between different locations. Another widely used tool is “tar,” which creates compressed archives of files and directories for easy storage and retrieval.
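A minimal rsync run might look like the sketch below, which mirrors one directory into another. Scratch directories stand in for real source and backup locations, and the remote address in the comment is a placeholder.

```shell
#!/bin/sh
# Sketch: mirror a directory with rsync. Scratch directories stand in for
# real source and backup locations.
src=$(mktemp -d); dest=$(mktemp -d)
echo "holiday photos list" > "$src/photos.txt"
if command -v rsync >/dev/null 2>&1; then
    rsync -a "$src/" "$dest/"     # -a keeps permissions, timestamps, and structure
    synced=$(ls "$dest")
    echo "copied: $synced"
fi
# A remote copy works the same way over SSH (placeholder address and path):
#   rsync -a "$src/" username@remote_IP_address:/path/to/backups/
rm -rf "$src" "$dest"
```

The trailing slash on the source path tells rsync to copy the directory's contents rather than the directory itself.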

When deciding what data to back up, start by identifying your most critical files and folders. These may include personal documents, photos, videos, important emails, or any other irreplaceable data. Additionally, consider backing up configuration files specific to your system setup or any customizations you’ve made.

Once you’ve determined what to back up, it’s essential to choose a secure offsite storage solution. Storing backups offsite protects them from physical damage such as fire, theft, or natural disasters that could affect your primary storage location. Cloud storage services like Dropbox, Google Drive, or dedicated backup solutions like Backblaze offer convenient options for securely storing your backups online.

It’s worth noting that encrypting your backups adds an extra layer of security to protect your data from unauthorized access. Linux provides various encryption tools such as GnuPG (GPG) or VeraCrypt, which allow you to encrypt your backup files before storing them offsite. This ensures that even if someone gains access to your backup files, they won’t be able to decipher the content without the encryption key.
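With GnuPG, for example, an archive can be encrypted with a passphrase before it leaves your machine. The sketch below assumes gpg is installed and wraps the command in a function, whose name is hypothetical, so that nothing runs until you call it.

```shell
#!/bin/sh
# Sketch: encrypt an archive with a passphrase before uploading it.
# Nothing runs until the function is called; the function name is hypothetical.
encrypt_backup() {   # usage: encrypt_backup <archive>
    [ -f "$1" ] || { echo "no such file: $1" >&2; return 1; }
    gpg --symmetric --cipher-algo AES256 "$1"   # prompts for a passphrase, writes <archive>.gpg
}
# Usage:   encrypt_backup documents.tar.gz
# Decrypt: gpg --output documents.tar.gz --decrypt documents.tar.gz.gpg
```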

To ensure the effectiveness of your backup strategy, it’s important to regularly test the restoration process. Periodically retrieve a sample of your backed-up data and verify that you can successfully restore it onto a separate system. By doing so, you can have peace of mind knowing that your backups are reliable and accessible when needed.
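A trial restore can be as simple as the sketch below, which archives a directory with tar, unpacks it somewhere else, and compares the two copies. All paths are scratch directories created for the example.

```shell
#!/bin/sh
# Sketch: archive a directory with tar, restore it elsewhere, and compare.
# All paths are scratch directories created for the example.
src=$(mktemp -d); backups=$(mktemp -d); restore=$(mktemp -d)
mkdir -p "$src/documents"
echo "quarterly figures" > "$src/documents/report.txt"

archive="$backups/documents-$(date +%Y-%m-%d).tar.gz"
tar -czf "$archive" -C "$src" documents     # create a compressed archive
tar -tzf "$archive"                         # list the contents as a sanity check

tar -xzf "$archive" -C "$restore"           # trial restore into a separate location
if diff -r "$src/documents" "$restore/documents" >/dev/null; then
    restore_ok=yes
else
    restore_ok=no
fi
echo "restore verified: $restore_ok"
rm -rf "$src" "$backups" "$restore"
```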

Remember, making regular backups and storing them securely offsite is not just a good practice; it’s an essential part of protecting your valuable data in the Linux operating system. Take control of your data’s destiny by implementing a robust backup strategy today. In the event of hardware failure or unexpected data loss, you’ll be grateful for the foresight and effort put into safeguarding what matters most to you.

Enhancing Security: Automate Monitoring on Your Linux Server

In an increasingly interconnected world, the security of our digital assets and information is of paramount importance. As a Linux server administrator, it is essential to stay vigilant and proactive in safeguarding your server against potential threats. One effective way to bolster your server’s security is by setting up automated monitoring tools to detect suspicious activity.

Automated monitoring tools act as silent guardians, constantly scanning your server for any signs of unauthorized access, unusual behavior, or potential vulnerabilities. By implementing such tools, you can receive real-time alerts and take immediate action when any suspicious activity is detected.

There are various monitoring tools available for Linux servers, each with its own set of features and capabilities. One popular choice is the open-source tool called “Fail2Ban.” Fail2Ban works by analyzing log files and dynamically blocking IP addresses that exhibit malicious behavior, such as repeated failed login attempts or other suspicious activities.

Another powerful monitoring tool is “OSSEC,” which provides intrusion detection capabilities along with log analysis and file integrity checking. OSSEC can be configured to send notifications whenever it detects any deviations from normal system behavior or any signs of a potential security breach.

Setting up these automated monitoring tools on your Linux server involves a few steps. First, you need to install the chosen tool on your system using package managers like APT or YUM. Once installed, you will need to configure the tool according to your specific requirements and define the parameters for what should be considered suspicious activity.

For example, in Fail2Ban, you can customize the number of failed login attempts that trigger an IP ban or specify which log files should be monitored for potential threats. Similarly, in OSSEC, you can configure rulesets to define what types of events should trigger alerts and specify how those alerts should be delivered (e.g., email notifications or integration with a centralized logging system).
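For Fail2Ban, these settings live in a jail definition. The sketch below writes a minimal example to a scratch file so it can be inspected safely; on a real server the file would normally be /etc/fail2ban/jail.local, and the values shown are illustrative rather than recommendations.

```shell
#!/bin/sh
# Sketch: write a minimal Fail2Ban override to a scratch file.
# maxretry = failed attempts before a ban; findtime = window in seconds
# in which they are counted; bantime = ban length in seconds.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[sshd]
enabled  = true
maxretry = 5
findtime = 600
bantime  = 3600
EOF
cat "$conf"
maxretry=$(sed -n 's/^maxretry *= *//p' "$conf")
echo "ban after $maxretry failed attempts"
rm -f "$conf"
# After editing the real file, reload and inspect the jail:
#   sudo systemctl restart fail2ban
#   sudo fail2ban-client status sshd
```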

Once configured, these monitoring tools will run quietly in the background, continuously analyzing log files and network activity. If any suspicious activity is detected, they will trigger alerts, allowing you to take immediate action and mitigate potential security risks.

Automated monitoring tools not only provide an additional layer of security but also save valuable time and effort for server administrators. Instead of manually reviewing logs and searching for anomalies, these tools do the heavy lifting for you, freeing up your time to focus on other critical tasks.

In conclusion, setting up automated monitoring tools on your Linux server is a proactive step towards enhancing its security. By leveraging these tools’ capabilities to detect suspicious activity in real-time, you can swiftly respond to potential threats and protect your server from unauthorized access or malicious attacks. Invest in the safety of your Linux server today and enjoy peace of mind knowing that you have an automated security system watching over your digital assets.

Familiarise yourself with the most popular Linux distributions (such as Ubuntu, Fedora, Debian) so you can choose the right one for your needs.

Choosing the Right Linux Distribution for Your Needs

When venturing into the world of Linux, one of the first decisions you’ll face is selecting a distribution that suits your needs. With a plethora of options available, familiarizing yourself with some of the most popular distributions can help you make an informed choice. Here are a few noteworthy distributions to consider: Ubuntu, Fedora, and Debian.

Ubuntu, known for its user-friendly interface and extensive community support, has gained widespread popularity among both beginners and experienced users. It offers a polished desktop environment and a vast software repository that covers a wide range of applications. Ubuntu’s focus on ease-of-use and stability makes it an excellent choice for those transitioning from other operating systems.

Fedora, backed by Red Hat, is renowned for its commitment to cutting-edge technology and features. It serves as a platform for innovation in the open-source community, making it ideal for developers and tech enthusiasts who want access to the latest software advancements. Fedora provides a balance between stability and new features through regular updates.

Debian, one of the oldest Linux distributions, is known for its rock-solid stability and adherence to free software principles. It boasts a massive software repository supported by an active community of developers who prioritize security and reliability. Debian’s versatility allows it to be used on various hardware architectures, making it suitable for servers as well as desktops.

Exploring these three popular distributions will give you insight into the different philosophies and strengths that exist within the Linux ecosystem. However, keep in mind that this is just scratching the surface; there are numerous other distributions tailored to specific needs or preferences.

Consider factors such as your level of technical expertise, intended use (e.g., desktop computing or server deployment), hardware compatibility, available software packages, and community support when choosing a distribution. Each distribution has its own unique characteristics that may align better with certain requirements.

Fortunately, most Linux distributions offer live versions that allow you to test them out before committing. Take advantage of this feature to experience the look and feel of each distribution firsthand. Additionally, online forums, documentation, and user communities can provide valuable insights and assistance as you navigate your way through the selection process.

Remember, the beauty of Linux lies in its flexibility and choice. While Ubuntu, Fedora, and Debian are popular options, don’t hesitate to explore other distributions that might better suit your specific needs. With a little research and experimentation, you’ll find the perfect Linux distribution that empowers you to make the most of this powerful operating system.