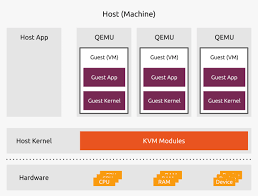

Linux Server Solutions: Empowering Businesses with Stability and Flexibility

In today’s technologically advanced world, businesses of all sizes are increasingly relying on robust server solutions to meet their diverse needs. Among the various options available, Linux server solutions have emerged as a popular choice due to their stability, flexibility, and cost-effectiveness. In this article, we will explore the benefits of Linux server solutions and why they are an ideal choice for businesses looking to optimize their infrastructure.

Stability and Reliability

One of the key advantages of Linux server solutions is their stability and reliability. Linux operating systems are known for handling heavy workloads without degrading performance, and a well-maintained Linux server can run for long periods without unplanned reboots or system failures. (Kernel security updates generally still require a reboot, or a live-patching service, to take effect.) This stability keeps operations running, minimizing downtime and maximizing productivity for businesses.

Flexibility and Customization

Linux offers a high degree of flexibility, allowing businesses to tailor their server environment to their specific requirements. With a wide range of distributions available, such as Ubuntu, CentOS, and Debian, businesses can choose the one that best suits their needs. Additionally, Linux’s open-source nature permits extensive customization, so businesses can adapt the server software stack precisely to their applications.

Cost-Effectiveness

Linux server solutions can offer significant cost advantages over proprietary alternatives. Most Linux distributions carry no licensing fees (commercial support subscriptions are available but optional for many of them), so businesses can allocate more resources to other critical areas. Moreover, Linux’s modest resource footprint, especially on minimal server installations without a graphical desktop, can let the same hardware carry more workload, which may translate into lower hardware costs without compromising performance or reliability.

Security

In today’s digital landscape, where cyber threats are prevalent, security is a top concern for businesses. Linux has a strong reputation for its security features and for addressing vulnerabilities promptly. The open-source nature of Linux allows continuous scrutiny by a global community of developers who help identify and patch security flaws. This collaborative effort helps keep Linux server solutions secure, provided administrators apply the resulting updates, safeguarding sensitive data and protecting businesses from potential threats.

Scalability

As businesses grow, their server requirements evolve. Linux server solutions offer good scalability, allowing businesses to expand their infrastructure as needed. Whether it’s adding more storage capacity, increasing processing power, or accommodating additional users, Linux runs on everything from small virtual machines to large multi-core servers and clusters, so capacity can be scaled up or down to meet changing demands. This helps businesses adapt their server environment as they grow without major disruptions or costly migrations.

Support and Community

Linux benefits from a vast and dedicated community of users and developers who actively contribute to its growth and development. This vibrant community provides extensive support through forums, documentation, and online resources. Businesses can leverage this wealth of knowledge to troubleshoot issues, seek guidance, and stay updated on the latest advancements in Linux server solutions.

In conclusion, Linux server solutions offer a compelling proposition for businesses seeking stability, flexibility, cost-effectiveness, security, scalability, and robust community support. With its rock-solid performance and extensive customization options, Linux empowers businesses to build a reliable infrastructure that aligns precisely with their unique requirements. Embracing Linux as a server solution can unlock new possibilities for businesses in today’s ever-evolving digital landscape.

7 Essential Tips for Linux Server Solutions

- Use a reliable Linux server distribution such as Ubuntu or CentOS.

- Ensure your server is regularly updated with the latest security patches and software updates.

- Use a secure remote access solution such as SSH for remote administration of your server, and SFTP (which runs over SSH) for transferring files.

- Set up automated backups to ensure data integrity in case of system failure or disaster recovery scenarios.

- Implement strong authentication and authorization procedures to protect against unauthorized access to your server environment and data resources.

- Monitor your system performance with tools like sar, top, and iostat, so that you can quickly detect anomalies or potential threats before they become critical issues.

- Invest in a good-quality firewall solution to protect against malicious attacks and intrusions from external sources.

Use a reliable Linux server distribution such as Ubuntu or CentOS.

Unlock the Power of Linux Server Solutions: Choose Ubuntu or CentOS for Reliability

When it comes to Linux server solutions, choosing the right distribution is crucial for a seamless and efficient experience. Two popular options that have gained widespread recognition are Ubuntu and CentOS. These reliable Linux distributions offer a solid foundation for businesses seeking stability, security, and ease of use. In this article, we will explore why selecting a trusted distribution like Ubuntu or CentOS can significantly enhance your Linux server environment.

Ubuntu: The Power of Simplicity

Ubuntu has established itself as one of the most user-friendly Linux distributions available. With approachable tooling and extensive documentation, Ubuntu makes it easy for businesses to set up and manage their servers efficiently. Its large software repositories give access to a wide range of applications and tools required for various business needs.

Ubuntu is also committed to regular updates and long-term support (LTS) releases. LTS versions, published every two years, receive five years of standard security updates and maintenance (extendable through Canonical’s Ubuntu Pro offering), giving businesses peace of mind that their servers stay protected against newly discovered vulnerabilities.

CentOS: Stability at Its Core

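CentOS is renowned for its stability, making it an excellent choice for businesses that prioritize reliability above all else. As an enterprise-class distribution built from the source code of Red Hat Enterprise Linux (RHEL), CentOS Linux offered a robust foundation backed by RHEL’s extensive testing and quality assurance processes. Note, however, that CentOS Linux has reached end of life: the last supported version, CentOS Linux 7, stopped receiving updates on June 30, 2024. The CentOS project now produces CentOS Stream, which tracks just ahead of RHEL development, while community rebuilds such as Rocky Linux and AlmaLinux fill the role CentOS Linux used to play.

With their focus on stability rather than frequent feature updates, these RHEL-compatible distributions provide a secure environment suitable for critical workloads. Each major release receives regular security patches and bug fixes over a long life cycle (ten years in RHEL’s case), allowing businesses to maintain a stable server infrastructure without disruptions.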

Security First Approach

Both Ubuntu and CentOS prioritize security as a fundamental aspect of their distributions. Security updates are released promptly when vulnerabilities are disclosed, and both distributions benefit from active communities that help identify and resolve security issues.

Ubuntu’s Security Team works to ensure that patches are available promptly whenever new vulnerabilities emerge. Similarly, CentOS, as a RHEL-derived distribution, builds on the security fixes Red Hat develops for RHEL, benefiting from Red Hat’s extensive security expertise.

Community Support and Documentation

Ubuntu and CentOS have vibrant communities that offer extensive support and resources for users. Online forums, documentation, and user-contributed guides provide valuable insights, troubleshooting assistance, and best practices. The active communities surrounding these distributions foster collaboration and knowledge sharing, enabling businesses to overcome challenges effectively.

Making the Right Choice

When it comes to Linux server solutions, selecting a reliable distribution like Ubuntu or CentOS is a wise decision. Ubuntu’s simplicity and vast software repository make it an excellent choice for businesses seeking an accessible and user-friendly experience. On the other hand, CentOS’s stability and long-term support make it ideal for critical workloads that demand unwavering reliability.

Ultimately, the choice between Ubuntu and CentOS depends on your specific requirements and preferences. Consider factors such as ease of use, desired level of stability, available software packages, and community support when making this decision.

By choosing a trusted Linux distribution like Ubuntu or CentOS for your server solutions, you can unlock the full potential of Linux while ensuring a secure and reliable infrastructure for your business. Embrace the power of these distributions to elevate your server environment to new heights of efficiency and productivity.

Ensure your server is regularly updated with the latest security patches and software updates.

Secure Your Linux Server: The Importance of Regular Updates

When it comes to managing a Linux server, ensuring the security and stability of your system should be a top priority. One crucial tip for maintaining a secure server environment is to regularly update it with the latest security patches and software updates. In this article, we will explore why this practice is essential and how it can safeguard your server from potential vulnerabilities.

Stay One Step Ahead of Threats

The digital landscape is constantly evolving, with new security threats emerging every day. Hackers and malicious actors are always on the lookout for vulnerabilities they can exploit. By keeping your Linux server up to date with the latest security patches, you can stay one step ahead of these threats. Software updates often include bug fixes and vulnerability patches that address known security issues, helping to fortify your server against potential attacks.

Protect Sensitive Data

Servers often house sensitive data, including customer information, financial records, or proprietary business data. A breach in server security could have severe consequences, leading to data leaks or unauthorized access. Regularly updating your server ensures that you are incorporating the latest security measures designed to protect your valuable data. By promptly applying software updates, you minimize the risk of exploitation and help maintain the confidentiality and integrity of your information.

Enhance System Stability and Performance

Software updates not only address security concerns but also improve system stability and performance. Developers continuously work on refining their software by fixing bugs and optimizing performance. By regularly updating your Linux server, you take advantage of these improvements, ensuring that your system runs smoothly and efficiently. This can lead to enhanced productivity for both you and your users while minimizing any disruptions caused by outdated or incompatible software versions.

Safeguard Against Known Vulnerabilities

As new software vulnerabilities are discovered, developers release patches to address them promptly. These vulnerabilities could potentially allow hackers to gain unauthorized access or compromise the integrity of your server. Regularly updating your Linux server ensures that you are applying these patches, effectively closing any known security loopholes. By doing so, you significantly reduce the risk of falling victim to attacks targeting these vulnerabilities.
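On Debian or Ubuntu, a first step towards regular updating is knowing what is pending. The following is a minimal sketch, assuming the line format that `apt list --upgradable` prints, for example `openssl/jammy-updates,jammy-security 3.0.2-0ubuntu1.15 amd64 [upgradable from: 3.0.2-0ubuntu1.14]` (the version numbers here are made-up sample values). apt itself warns that its command-line output is not a stable interface for scripts, so treat the parsing as illustrative. The helper flags packages offered from a `-security` pocket so they can be applied first.

```python
import subprocess


def parse_upgradable(output: str) -> list[dict]:
    """Return one dict per upgradable package, flagging security-pocket updates.

    Assumes apt's human-readable format, which is not a stable interface:
    name/origin1,origin2 new-version arch [upgradable from: old-version]
    """
    packages = []
    for line in output.splitlines():
        # Skip the "Listing..." header, warnings and blank lines.
        if "/" not in line or "[upgradable from:" not in line:
            continue
        name_and_origins, new_version, _arch, *_rest = line.split()
        name, origins = name_and_origins.split("/", 1)
        packages.append({
            "name": name,
            "new_version": new_version,
            # Origins are comma-separated, e.g. "jammy-updates,jammy-security".
            "security": any(o.endswith("-security") for o in origins.split(",")),
        })
    return packages


def pending_updates() -> list[dict]:
    """Run apt on the local machine (Debian/Ubuntu only) and parse its output."""
    result = subprocess.run(
        ["apt", "list", "--upgradable"],
        capture_output=True, text=True, check=True,
    )
    return parse_upgradable(result.stdout)


def security_first(packages: list[dict]) -> list[str]:
    """Package names ordered so that security updates come first."""
    return [p["name"] for p in sorted(packages, key=lambda p: not p["security"])]
```

In practice, the `unattended-upgrades` package can install security updates automatically on Debian and Ubuntu; a script like this is more useful for reporting what is still outstanding.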

Simplify Future Upgrades

Regular updates also lay the foundation for future upgrades and migrations. By consistently updating your server, you ensure that your system remains compatible with the latest software versions. This makes future upgrades smoother and less prone to compatibility issues. Neglecting updates for an extended period can result in a backlog of patches and updates, making it more challenging to upgrade or migrate your server when needed.

In conclusion, regularly updating your Linux server with the latest security patches and software updates is vital for maintaining a secure and stable environment. It protects against emerging threats, safeguards sensitive data, enhances system performance, and simplifies future upgrades. By prioritizing regular updates, you demonstrate a proactive approach to server security, ensuring that your Linux server remains resilient against potential vulnerabilities in an ever-evolving digital landscape.

Utilize a secure remote access solution such as SSH or SFTP for remote administration of your server.

Enhancing Security and Efficiency: Secure Remote Access Solutions for Linux Server Administration

In the realm of Linux server solutions, secure remote access is paramount for efficient administration and for safeguarding sensitive data. A tip that can significantly enhance both security and productivity is to use a secure remote access solution such as SSH (Secure Shell), together with SFTP (SSH File Transfer Protocol) for file transfers, when managing your server remotely. In this article, we will explore the benefits of these protocols and how they contribute to a robust server administration environment.

SSH, the industry-standard protocol for secure remote access, provides a secure channel over an unsecured network, encrypting all data transmitted between the client and the server. By utilizing SSH, administrators can securely connect to their Linux servers from any location, granting them full control over system management tasks without compromising security.

The advantages of using SSH for remote administration are manifold. Firstly, it establishes an encrypted connection that protects sensitive information, such as login credentials and transferred data, from eavesdropping or interception by malicious parties. SSH also verifies the server’s host key, so a client can detect when it is talking to an impostor. Encryption on its own does not decide who may log in, however; that is the job of SSH’s authentication methods.

Secondly, SSH offers a range of authentication methods to ensure strong access control. These methods include password-based authentication, key-based authentication using public-private key pairs, or even two-factor authentication for an added layer of security. By implementing these authentication mechanisms, businesses can fortify their remote administration practices against unauthorized access attempts.
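Key-based authentication is easiest to rely on when password logins are switched off in the SSH daemon’s configuration. Below is a minimal sketch of an audit that reads `sshd_config` text and reports a few commonly recommended settings (`PasswordAuthentication no`, `PermitRootLogin no`, `PubkeyAuthentication yes`). It assumes simple whitespace-separated `Keyword value` lines, applies sshd’s rule that the first value given for a keyword wins, and deliberately ignores `Match` blocks and `Include` files; the baseline itself is an assumption to adapt to your own policy.

```python
# Hypothetical baseline: key-based logins only, no direct root login.
RECOMMENDED = {
    "passwordauthentication": "no",
    "permitrootlogin": "no",
    "pubkeyauthentication": "yes",
}


def effective_settings(config_text: str) -> dict[str, str]:
    """Return keyword -> value, keeping the FIRST value seen (as sshd does).

    Sketch limitations: Match blocks, Include files and "Keyword=value"
    syntax are not handled.
    """
    settings: dict[str, str] = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        keyword = parts[0].lower()  # sshd keywords are case-insensitive
        if keyword == "match":
            break  # everything after a Match line is conditional; not modeled here
        if len(parts) < 2:
            continue
        value = parts[1].strip().strip('"').lower()
        settings.setdefault(keyword, value)
    return settings


def audit(config_text: str) -> list[str]:
    """List findings where the config departs from RECOMMENDED.

    Keywords left unset are reported as "<default>" so they can be made explicit.
    """
    settings = effective_settings(config_text)
    findings = []
    for key, wanted in RECOMMENDED.items():
        actual = settings.get(key, "<default>")
        if actual != wanted:
            findings.append(f"{key}: expected {wanted!r}, found {actual!r}")
    return findings
```

On a live server, `sshd -T` (run as root) prints the effective configuration as lowercase `keyword value` lines, which the same parser can read.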

Furthermore, SSH provides features like port forwarding and tunneling capabilities that enable administrators to securely access services running on the server’s local network from a remote location. This functionality proves invaluable when managing servers behind firewalls or accessing internal resources securely.
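Local port forwarding is the most common form of tunneling: a port on the administrator’s machine is connected, through the SSH session, to a service that is only reachable from the server. Here is a minimal sketch that builds the OpenSSH command line; the user, host and ports are hypothetical placeholders. `-L local_port:target_host:target_port` defines the forward (the target is resolved from the server’s side) and `-N` tells ssh not to run a remote command.

```python
import shlex


def local_forward_cmd(user: str, server: str, local_port: int,
                      target_host: str, target_port: int) -> list[str]:
    """Forward localhost:local_port through `server` to target_host:target_port.

    -L sets up the forward; -N asks ssh not to run a remote command, so the
    session exists only to carry the tunnel.
    """
    spec = f"{local_port}:{target_host}:{target_port}"
    return ["ssh", "-N", "-L", spec, f"{user}@{server}"]


# Example: reach a database that listens only on the server's loopback interface.
cmd = local_forward_cmd("admin", "server.example.com", 15432, "localhost", 5432)
print(shlex.join(cmd))
```

While that command runs, connecting to `localhost:15432` on the administrator’s machine reaches the database on the server; passing the list to `subprocess.run` starts the tunnel from a script.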

Another secure option, for file transfers, is SFTP (SSH File Transfer Protocol), which runs over an SSH connection and allows administrators to transfer files securely between local machines and remote servers. Because it uses SSH as its transport, SFTP encrypts file transfers to prevent unauthorized interception or tampering during transit.

By using SFTP for file transfers, administrators can securely upload, download, or manage files on the server remotely. This removes the need for less secure methods such as plain FTP (File Transfer Protocol), which sends credentials and data unencrypted, and keeps sensitive data protected throughout the transfer process.
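For scheduled, unattended transfers, OpenSSH’s `sftp` client has a batch mode (`sftp -b batchfile`) that reads commands such as `put` from a file. A minimal sketch, assuming key-based authentication is already configured (batch mode cannot prompt for a password); the file names, user and host are hypothetical.

```python
def sftp_batch(uploads: list[tuple[str, str]]) -> str:
    """Return batch-file text with one `put local remote` command per pair."""
    lines = []
    for local, remote in uploads:
        if '"' in local or '"' in remote:
            raise ValueError("paths containing double quotes are not handled in this sketch")
        # Double quotes keep paths with spaces together as single arguments.
        lines.append(f'put "{local}" "{remote}"\n')
    return "".join(lines)


def sftp_command(batch_path: str, user: str, server: str) -> list[str]:
    """argv for a non-interactive sftp session driven by the batch file."""
    return ["sftp", "-b", batch_path, f"{user}@{server}"]


batch_text = sftp_batch([("my report.csv", "/srv/incoming/report.csv")])
print(batch_text, end="")
```

Write the returned text to a file and pass that file’s path to `sftp_command`. In batch mode, sftp aborts when a transfer command such as `put` fails, so errors show up in its exit status.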

In conclusion, utilizing a secure remote access solution like SSH or SFTP for Linux server administration is a best practice that enhances both security and efficiency. These protocols establish encrypted connections, authenticate users securely, and enable seamless remote management of servers while safeguarding sensitive data from potential threats. By implementing these measures, businesses can confidently embrace remote server administration, knowing that their infrastructure is protected by robust security measures.

Set up automated backups to ensure data integrity in case of system failure or disaster recovery scenarios.

Ensuring Data Integrity with Automated Backups: A Crucial Tip for Linux Server Solutions

In the world of Linux server solutions, where businesses rely heavily on their infrastructure to store and process critical data, data integrity is of paramount importance. System failures or unforeseen disasters can potentially result in data loss, leading to severe consequences for businesses. To safeguard against such scenarios, setting up automated backups is a crucial tip that can help maintain data integrity and facilitate efficient disaster recovery.

Automated backups offer several advantages over manual backup processes. By automating the backup process, businesses can ensure that their data is regularly and consistently backed up without relying on human intervention. This eliminates the risk of oversight or forgetfulness that may occur when relying on manual backup procedures.

Implementing automated backups involves configuring backup software or scripts to run at scheduled intervals, typically via cron or a systemd timer. Linux offers a wide range of robust backup tools, such as rsync, Bacula, and Amanda, which provide flexible options for creating automated backup routines tailored to specific needs.
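As an illustration of how rsync can produce space-efficient daily snapshots, here is a minimal sketch that builds the command for a new dated snapshot. It assumes a hypothetical layout (`/srv/data/` backed up into `/backup/data/YYYY-MM-DD_HHMMSS`) and uses rsync’s `--link-dest` option, so that files unchanged since the previous snapshot are hard-linked rather than copied.

```python
from datetime import datetime
from pathlib import PurePosixPath

SOURCE = "/srv/data/"                          # hypothetical; trailing slash copies the contents
BACKUP_ROOT = PurePosixPath("/backup/data")    # hypothetical backup location


def snapshot_command(existing: list[str], now: datetime) -> list[str]:
    """Return the rsync argv for a new snapshot directory named after `now`.

    `existing` holds the names of earlier snapshot directories. Because the
    names are zero-padded timestamps, the largest one is the most recent.
    """
    target = BACKUP_ROOT / now.strftime("%Y-%m-%d_%H%M%S")
    cmd = ["rsync", "--archive"]
    if existing:
        # Unchanged files are hard-linked to the previous snapshot instead of
        # copied, so every snapshot is complete but only changes use new space.
        cmd.append(f"--link-dest={BACKUP_ROOT / max(existing)}")
    cmd += [SOURCE, str(target)]
    return cmd
```

A scheduler would run this once a day, for example with a crontab entry such as `30 2 * * * /usr/local/bin/snapshot-backup` (the script path is hypothetical), passing the returned list to `subprocess.run(..., check=True)` so that a failed copy raises an error that monitoring can pick up.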

When setting up automated backups, it is essential to consider the following best practices:

- Define a Backup Strategy: Determine what data needs to be backed up and establish a comprehensive backup strategy. Identify critical files, databases, configurations, and any other important information that should be included in the backups.

- Choose Backup Locations: Select appropriate storage locations for your backups. It is advisable to use separate physical or cloud storage devices to minimize the risk of data loss due to hardware failures or disasters affecting the primary server.

- Schedule Regular Backups: Set up a regular backup schedule based on your business requirements and how often your data changes. A common pattern is a periodic full backup combined with daily incremental backups, which capture only what has changed since the previous backup; this keeps recent data recoverable while minimizing storage requirements.

- Test Backup Restorations: Regularly test the restoration process from your backups to ensure they are working correctly and your data can be recovered successfully if needed. This practice helps identify any potential issues or gaps in your backup strategy and allows for timely adjustments.

- Monitor Backup Processes: Implement monitoring mechanisms to ensure that backups are running as scheduled and completing successfully. Automated notifications or alerts can help identify any failures or errors promptly, allowing for timely troubleshooting and resolution.
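Testing a restoration means checking that what comes back matches what was saved. One minimal way to do that, assuming the original data and a test restore are both reachable from the same machine, is to compare SHA-256 checksums of every file in the two directory trees; the directories passed in are up to you.

```python
import hashlib
from pathlib import Path


def tree_digests(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            h = hashlib.sha256()
            with path.open("rb") as fh:
                # Read in 1 MiB chunks so large files do not need to fit in memory.
                for chunk in iter(lambda: fh.read(1 << 20), b""):
                    h.update(chunk)
            digests[path.relative_to(root).as_posix()] = h.hexdigest()
    return digests


def compare_trees(original: Path, restored: Path) -> dict[str, list[str]]:
    """Report files missing from, extra in, or different in the restored tree."""
    a, b = tree_digests(original), tree_digests(restored)
    return {
        "missing": sorted(a.keys() - b.keys()),
        "extra": sorted(b.keys() - a.keys()),
        "changed": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
    }
```

Compare against data that has not changed since the backup ran (otherwise legitimate edits show up as "changed"). Empty lists in all three categories are good evidence the backup is usable; anything else is worth an alert.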

By setting up automated backups, businesses can significantly reduce the risk of data loss and ensure data integrity in the event of system failures or disaster recovery scenarios. This proactive approach not only protects valuable information but also provides peace of mind, knowing that critical data is securely backed up and readily available for restoration when needed.

In the fast-paced digital landscape, where the importance of data cannot be overstated, implementing automated backups is a fundamental step towards maintaining a robust and resilient Linux server solution. Take the necessary precautions today to safeguard your business’s valuable data and ensure its integrity in any eventuality.

Implement strong authentication and authorization procedures to protect against unauthorized access to your server environment and data resources.

Enhancing Security in Linux Server Solutions: Implementing Strong Authentication and Authorization Procedures

In the realm of Linux server solutions, ensuring robust security measures is paramount to safeguarding your server environment and protecting valuable data resources. One crucial tip to fortify your system against unauthorized access is to implement strong authentication and authorization procedures. By doing so, you can significantly reduce the risk of potential breaches and maintain the integrity of your server infrastructure. In this article, we will delve into the importance of strong authentication and authorization procedures and how they contribute to bolstering security.

Authentication serves as the initial line of defense in preventing unauthorized access to your Linux server. It involves verifying the identity of users attempting to gain entry into the system. Implementing strong authentication mechanisms such as two-factor authentication (2FA) or multi-factor authentication (MFA) adds an extra layer of protection beyond traditional username-password combinations. These methods typically require users to provide additional information or use a secondary device, such as a mobile phone or hardware token, to verify their identity. By requiring multiple factors for authentication, even if one factor is compromised, attackers will find it significantly more challenging to gain unauthorized access.
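Many second factors, including most authenticator apps, use time-based one-time passwords (TOTP, RFC 6238), which derive a short code from a shared secret and the current time. The sketch below shows the algorithm using only the standard library, for illustration; on a Linux server the second factor would normally be enforced by a PAM module or the SSH daemon rather than by hand-written code. It reproduces the RFC’s published test values, for example the 8-digit code 94287082 for the ASCII secret `12345678901234567890` at Unix time 59.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of the 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte choose a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with counter = whole `step`-second intervals since the Unix epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, candidate: str, at: Optional[float] = None,
           step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    times = (now + i * step for i in range(-window, window + 1))
    return any(
        hmac.compare_digest(totp(secret, t, step, digits), candidate)
        for t in times if t >= 0
    )
```

Authenticator apps usually exchange the secret as a Base32 string; `base64.b32decode` converts it into the bytes these functions expect.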

Furthermore, enforcing sound password policies is an essential aspect of robust authentication. Require long passwords or passphrases, reject passwords that appear on lists of common or previously breached passwords, and make sure passwords are not reused across different accounts or services. Current guidance, such as NIST SP 800-63B, favors length over character-composition rules and recommends requiring a password change when there is evidence of compromise rather than on a fixed schedule.
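A password check along these lines can be sketched as follows. It is a minimal illustration with placeholder values: the 12-character minimum and the tiny inline blocklist stand in for your own policy and a real list of breached passwords, and on Linux such rules are normally enforced through PAM (for example, the pam_pwquality module) rather than application code.

```python
MIN_LENGTH = 12  # placeholder policy value
BLOCKLIST = {"password123", "qwertyuiop12", "letmein12345"}  # placeholder list


def password_problems(candidate: str, username: str) -> list[str]:
    """Return reasons the password should be rejected (an empty list means acceptable)."""
    problems = []
    if len(candidate) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if candidate.lower() in BLOCKLIST:
        problems.append("appears on the blocklist of common or breached passwords")
    if username and username.lower() in candidate.lower():
        problems.append("contains the username")
    if len(set(candidate)) <= 2:
        problems.append("uses too few distinct characters")
    return problems
```

A long passphrase such as "correct horse battery staple" passes these checks, while short or well-known passwords are rejected with a reason that can be shown to the user.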

In addition to authentication, implementing effective authorization procedures is crucial for maintaining control over user privileges within your Linux server environment. Authorization ensures that authenticated users have appropriate access rights based on their roles or responsibilities. By assigning granular permissions and limiting access only to necessary resources, you can minimize the risk of unauthorized actions or data breaches.

Implementing Role-Based Access Control (RBAC) is an excellent approach for managing authorization effectively. RBAC allows administrators to define roles with specific permissions and assign them to individual users or groups. This method simplifies access management, reduces the potential for human error, and ensures that users have access only to the resources required for their respective roles.
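The RBAC idea can be shown with a small model in which users hold roles, roles carry permissions, and everything not granted is denied by default. This is a conceptual sketch; the role, user and permission names are hypothetical, and on a Linux server the same structure is usually expressed through groups, sudo rules, or SELinux roles.

```python
# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "web-operator": {"service:restart:nginx", "logs:read:nginx"},
    "db-admin": {"service:restart:postgresql", "logs:read:postgresql", "backup:run"},
    "auditor": {"logs:read:nginx", "logs:read:postgresql"},
}

# Hypothetical users and the roles assigned to them.
USER_ROLES = {
    "alice": {"web-operator"},
    "bob": {"db-admin", "auditor"},
}


def permissions_for(user: str) -> set[str]:
    """Union of the permissions granted by every role the user holds."""
    return set().union(*(ROLE_PERMISSIONS[r] for r in USER_ROLES.get(user, set())))


def is_allowed(user: str, permission: str) -> bool:
    """Deny by default: only permissions granted through a role are allowed."""
    return permission in permissions_for(user)
```

Changing what a whole team may do then means editing one role, and revoking a departing employee’s access means removing their role assignments.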

Regularly reviewing and updating user privileges is equally important. As personnel changes occur within your organization, promptly revoke access for employees who no longer require it. Additionally, conduct periodic audits to identify any discrepancies or potential security vulnerabilities in your authorization framework.
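Part of a periodic privilege review can be automated. The sketch below parses `/etc/group`-formatted text (`name:password:GID:member1,member2`) and reports members of administrative groups who are not on an approved list. The group names `sudo` (Debian/Ubuntu), `wheel` (RHEL family) and `root` are assumptions; check which groups your sudoers policy actually trusts. Users whose primary group is privileged are recorded in `/etc/passwd` rather than in the member list, so they are not covered here.

```python
PRIVILEGED_GROUPS = {"sudo", "wheel", "root"}  # assumption; site-specific


def privileged_members(group_text: str) -> dict[str, list[str]]:
    """Parse name:password:GID:user1,user2 lines and return privileged groups' members."""
    result = {}
    for line in group_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) != 4:
            continue  # malformed line; a real audit should report it
        name, _password, _gid, members = fields
        if name in PRIVILEGED_GROUPS:
            result[name] = [m for m in members.split(",") if m]
    return result


def unexpected_admins(group_text: str, approved: set[str]) -> set[str]:
    """Privileged users who are not on the approved list, for periodic review."""
    found = {m for ms in privileged_members(group_text).values() for m in ms}
    return found - approved
```

On the server itself, the standard library’s `grp.getgrall()` returns the system’s group entries, so the same check can run without parsing the file by hand.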

While strong authentication and authorization procedures are essential for securing your Linux server environment, it is vital to complement these measures with other security practices. Regularly patching and updating software, configuring firewalls, implementing intrusion detection systems (IDS), and monitoring system logs are just a few additional steps you can take to enhance overall security.

By implementing robust authentication and authorization procedures in your Linux server solutions, you can significantly reduce the risk of unauthorized access and protect your valuable data resources. Strengthening these fundamental security measures fortifies the foundation of your server infrastructure, ensuring a resilient defense against potential threats. Embracing these practices will enable you to maintain a secure and reliable Linux server environment that instills confidence in both your organization and its stakeholders.

Maximizing System Performance: Monitor Your Linux Server with Essential Tools

In the fast-paced world of technology, maintaining optimal performance and preventing potential issues is crucial for businesses relying on Linux server solutions. To ensure a smooth and efficient operation, it is essential to monitor your system regularly. By leveraging powerful tools like sar, top, iostat, and others, you can detect anomalies or potential threats before they escalate into critical issues.

The sar (System Activity Reporter) command-line utility, part of the sysstat package, provides comprehensive system activity reports. Once sysstat’s periodic data collection is enabled, it records CPU usage, memory utilization, disk I/O, network traffic, and more. By analyzing sar reports over time, you can identify patterns or irregularities that may impact performance. This insight enables proactive troubleshooting and optimization to maintain a healthy server environment.
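sar, top and similar tools derive their CPU figures from cumulative kernel counters in `/proc/stat`. As a minimal sketch of that arithmetic (not how sar itself is implemented), overall CPU utilization is the share of elapsed clock ticks between two samples that were spent neither idle nor waiting for I/O.

```python
def parse_cpu_line(line: str) -> list[int]:
    """Return the user, nice, system, idle, iowait, irq, softirq and steal counters."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    # guest and guest_nice (if present) are already included in user and nice,
    # so only the first eight counters are summed to avoid double counting.
    return [int(v) for v in fields[1:9]]


def cpu_busy_percent(before: str, after: str) -> float:
    """Percentage of elapsed ticks not spent idle or in iowait between two samples."""
    deltas = [a - b for a, b in zip(parse_cpu_line(after), parse_cpu_line(before))]
    total = sum(deltas)
    idle = deltas[3] + deltas[4]  # idle + iowait
    return 0.0 if total == 0 else 100.0 * (total - idle) / total


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the aggregate 'cpu' line (the first line) on a live Linux system."""
    with open(path) as fh:
        return fh.readline()
```

On a live system, call `read_cpu_line()`, wait a second, call it again, and pass both lines to `cpu_busy_percent`.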

Another valuable tool is top, which displays real-time information about system processes and resource usage. With top, you can quickly identify resource-intensive processes that may be causing bottlenecks or slowing down your server. It allows you to prioritize critical tasks or make informed decisions regarding resource allocation.

The iostat utility, also part of the sysstat package, reports CPU utilization along with detailed input/output statistics for block devices such as disks and partitions. (Network interface statistics come from other tools, such as sar -n DEV.) By monitoring disk I/O performance using iostat, you can identify potential issues affecting read/write throughput or disk latency. This information helps optimize storage configurations and prevent performance degradation due to disk-related problems.
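iostat’s `%util` column is computed from `/proc/diskstats`, where the 13th column of each line (field 10 in the kernel’s I/O statistics documentation) counts the milliseconds the device spent doing I/O. Below is a minimal sketch of the same calculation between two samples; the device name and sampling interval are up to the caller.

```python
def io_ticks_ms(diskstats_text: str, device: str) -> int:
    """Return 'milliseconds spent doing I/Os' for the named device."""
    for line in diskstats_text.splitlines():
        fields = line.split()
        # Columns: major, minor, name, then the statistics; index 12 is io_ticks.
        if len(fields) >= 13 and fields[2] == device:
            return int(fields[12])
    raise KeyError(f"device {device!r} not found")


def util_percent(before: str, after: str, device: str, interval_ms: float) -> float:
    """Share of the sampling interval during which the device had I/O in flight."""
    busy = io_ticks_ms(after, device) - io_ticks_ms(before, device)
    return min(100.0, 100.0 * busy / interval_ms)
```

Treat `%util` with care on SSDs and RAID arrays, which serve many requests in parallel: a value near 100% there does not necessarily mean the device is saturated.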

Additionally, tools like vmstat offer insights into virtual memory usage by providing statistics on processes, memory utilization, paging activity, and more. Monitoring virtual memory allows you to identify memory-intensive applications or potential memory leaks early on and take appropriate actions to maintain system stability.

By regularly monitoring your Linux server’s performance using these tools (and others available in the vast Linux ecosystem), you gain visibility into the health of your system. Detecting anomalies or potential threats at an early stage empowers you to address them promptly before they become critical issues impacting productivity or causing downtime.

It is important to establish a monitoring routine that suits your specific needs. Schedule regular checks or set up automated alerts when certain thresholds are exceeded. This proactive approach enables you to stay one step ahead, ensuring that your Linux server operates at its peak performance.
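A threshold check of the kind described above can be very small. This minimal sketch reads the 15-minute load average from `/proc/loadavg` and `MemAvailable` from `/proc/meminfo`, and returns alert messages when placeholder limits are crossed. It is meant to be run periodically (for example from cron) and to hand its messages to whatever notification channel you already use.

```python
import os

LOAD_PER_CPU_LIMIT = 1.5      # placeholder: 15-minute load average per CPU
MIN_AVAILABLE_MEM_PCT = 10.0  # placeholder: alert below this share of RAM


def parse_meminfo(text: str) -> dict[str, int]:
    """Map field name to its numeric value (kB for memory sizes)."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        if rest.split():
            values[key] = int(rest.split()[0])
    return values


def check(loadavg_text: str, meminfo_text: str, cpus: int) -> list[str]:
    """Return alert messages for any threshold that is exceeded."""
    alerts = []
    load15 = float(loadavg_text.split()[2])  # fields: 1-, 5-, 15-minute averages, ...
    if load15 / cpus > LOAD_PER_CPU_LIMIT:
        alerts.append(f"15-min load {load15:.2f} is high for {cpus} CPUs")
    mem = parse_meminfo(meminfo_text)
    avail_pct = 100.0 * mem["MemAvailable"] / mem["MemTotal"]
    if avail_pct < MIN_AVAILABLE_MEM_PCT:
        alerts.append(f"only {avail_pct:.1f}% of memory available")
    return alerts


def check_live() -> list[str]:
    """Run the check against the local machine's /proc files."""
    with open("/proc/loadavg") as la, open("/proc/meminfo") as mi:
        return check(la.read(), mi.read(), os.cpu_count() or 1)
```

The limits are deliberately simple; tune them to your workload, and prefer alerting on sustained conditions rather than single samples to avoid noise.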

In conclusion, monitoring your Linux server’s performance is essential for maintaining a stable and efficient system. By utilizing powerful tools like sar, top, iostat, and others, you can quickly detect anomalies or potential threats before they escalate into critical issues. Implementing a robust monitoring strategy empowers businesses to optimize resource allocation, troubleshoot problems proactively, and ensure a seamless experience for users relying on the Linux server solution.

Invest in a good quality firewall solution to protect against malicious attacks and intrusions from external sources

Investing in a Reliable Firewall Solution: Safeguarding Linux Server Solutions

In today’s interconnected world, the security of your Linux server solutions is of paramount importance. With the increasing prevalence of cyber threats and malicious attacks, it is crucial to implement robust measures to protect your infrastructure. One essential step towards fortifying your server environment is investing in a good quality firewall solution.

A firewall acts as a critical line of defense, shielding your Linux server from unauthorized access and malicious intrusions originating from external sources. Positioned between your server and the wider internet, it monitors incoming and outgoing network traffic and enforces security policies.

By implementing a high-quality firewall solution, you can enjoy several benefits that contribute to the overall security and stability of your Linux server. Let’s explore some key advantages:

- Network Protection: A firewall scrutinizes network packets, filtering out potentially harmful or suspicious traffic. It examines data packets based on predefined rules and policies, allowing only legitimate connections while blocking unauthorized access attempts. This proactive approach helps prevent potential threats from compromising your server’s integrity.

- Intrusion Detection and Prevention: Many firewall solutions include, or integrate with, intrusion detection and prevention systems (IDS/IPS) that identify suspicious patterns or behaviors in network traffic. These can detect various types of attacks, such as port scanning, denial-of-service (DoS), or distributed denial-of-service (DDoS) attacks, and take action to mitigate them. Large volumetric DDoS attacks, however, usually need to be absorbed upstream, for example by your hosting provider or a dedicated mitigation service, before the traffic reaches your server.

- Application-Level Security: Some advanced firewalls provide application-level inspection capabilities, allowing them to analyze specific protocols or applications for potential vulnerabilities or anomalies. This additional layer of scrutiny helps protect against targeted attacks aimed at exploiting weaknesses in specific applications running on your Linux server.

- Access Control: Firewalls enable you to define granular access control policies for inbound and outbound connections. You can specify which IP addresses or ranges are allowed to communicate with your server, restricting access to only trusted sources. This level of control significantly reduces the attack surface and minimizes the risk of unauthorized access.

- Logging and Monitoring: A good firewall solution provides comprehensive logging and monitoring capabilities, allowing you to track and analyze network traffic patterns, attempted intrusions, or suspicious activities. These logs can serve as valuable resources for forensic analysis or proactive threat detection, helping you stay one step ahead of potential security breaches.
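The rule matching behind access control policies can be illustrated with a small model: rules are checked in order, the first match decides, and anything unmatched is dropped (default deny). This is a conceptual sketch built on Python’s `ipaddress` module, with hypothetical rules using the documentation address ranges; on Linux the actual filtering is done by netfilter, configured with nftables or iptables or through front-ends such as ufw and firewalld.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "accept" or "drop"
    source: str           # source network in CIDR notation
    port: Optional[int]   # destination port; None matches any port


# Hypothetical policy using documentation address ranges (RFC 5737).
RULES = [
    Rule("drop", "198.51.100.0/24", None),  # a network you never want to hear from
    Rule("accept", "203.0.113.0/24", 22),   # SSH only from the admin network
    Rule("accept", "0.0.0.0/0", 443),       # HTTPS from any IPv4 address
]


def decide(src_ip: str, dst_port: int, rules: list = RULES, default: str = "drop") -> str:
    """Return the action of the first matching rule, or the default (deny)."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        net = ipaddress.ip_network(rule.source)
        same_family = addr.version == net.version  # IPv4 rules never match IPv6 sources
        if same_family and addr in net and rule.port in (None, dst_port):
            return rule.action
    return default
```

Real firewalls are also stateful: they track connections so that replies to traffic your server initiated are allowed back in, which this model leaves out.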

Investing in a reliable firewall solution is an essential step towards enhancing the security posture of your Linux server solutions. It acts as a vital safeguard against external threats and provides peace of mind by ensuring that your infrastructure remains protected.

Remember that no security measure is foolproof, and it is crucial to keep your firewall solution up to date with regular patches and updates. Additionally, consider implementing other security best practices such as strong authentication mechanisms, regular backups, and proactive monitoring to create a holistic security approach for your Linux server environment.

By prioritizing the implementation of a robust firewall solution, you are taking a proactive stance in protecting your Linux server solutions from malicious attacks and intrusions. Safeguarding your infrastructure not only ensures business continuity but also instills trust among users who rely on your services.