Linux Ubuntu: Power and Simplicity Combined

Linux Ubuntu, often referred to simply as Ubuntu, is a popular open-source operating system that has built a strong following since its first release in 2004. Developed by Canonical Ltd., Ubuntu is known for combining power and versatility with a user-friendly interface. In this article, we will explore the key features and benefits that have made Ubuntu a go-to choice for individuals and organizations alike.

One of the standout features of Linux Ubuntu is its commitment to simplicity. From installation to everyday use, the developers have prioritized creating an intuitive experience for users. The installation process is straightforward, with a user-friendly interface guiding you through the necessary steps. Whether you are a seasoned Linux user or a complete beginner, you can get up and running with Ubuntu quickly and easily.

Ubuntu’s desktop offers a clean, modern design that is both visually appealing and functional. The default desktop environment, GNOME (with Ubuntu’s own customizations), provides an intuitive interface for navigating applications and files. If you prefer a different look, Ubuntu’s official flavors, such as Kubuntu (KDE Plasma) and Xubuntu (Xfce), offer alternative desktop environments.

Another notable aspect of Ubuntu is its vast software repository. With over 50,000 packages available through the official repositories, users have access to a wide range of free and open-source applications for many purposes. From productivity tools like LibreOffice to multimedia software like VLC Media Player, you can find much of what you need without having to scour the internet for installers.

Ubuntu’s commitment to security is also commendable. Regular updates are released to address vulnerabilities promptly and ensure that your system remains secure. The community-driven approach means that issues are identified quickly and resolved efficiently by both Canonical’s developers and the wider Linux community.

For developers and programmers, Ubuntu is a strong platform with extensive support for programming languages such as Python, C++, Java, and more. The GNU Compiler Collection (GCC) and related build tools are a single install away (the build-essential package), and together with a capable terminal and easy access to libraries and frameworks, they make Ubuntu an excellent choice for software development.

Moreover, Ubuntu supports a wide range of hardware and can be installed on most desktops, laptops, and servers. It performs well on current hardware, and on older machines a lighter flavor such as Xubuntu or Lubuntu can keep the system responsive.

Ubuntu’s community is also worth mentioning. It is known for its inclusivity and helpfulness: sites such as Ask Ubuntu and Ubuntu Discourse, along with chat channels, give users a place to seek assistance, share knowledge, and collaborate on projects. This support makes it likely that you will find answers to your questions or help with troubleshooting any issues you encounter.

In conclusion, Linux Ubuntu is a powerful yet user-friendly operating system that continues to gain popularity among individuals and organizations worldwide. With its commitment to simplicity, extensive software repository, security updates, developer-friendly environment, hardware compatibility, and supportive community, Ubuntu offers a compelling package for anyone seeking a reliable open-source operating system.

Whether you are a student looking for an alternative to proprietary software or an organization in search of a cost-effective solution for your IT infrastructure, Linux Ubuntu has proven itself as a trustworthy choice. Embrace the power of open-source technology with Ubuntu and unlock a world of possibilities for your computing needs.

7 Essential Tips for Securing and Maintaining Your Ubuntu System

- Always keep your Ubuntu system up to date with the latest security patches and software updates.

- Make sure you have a good antivirus installed and that it is regularly updated.

- Use strong passwords for all your accounts, especially those related to root access or sudo privileges.

- Be aware of the risks associated with using sudo or root privileges when running commands on your system – only use them when absolutely necessary!

- Install additional software only from trusted sources, such as the official Ubuntu repositories or reputable PPAs (Personal Package Archives).

- Regularly back up important data in case of system failure or other unforeseen events.

- Familiarize yourself with the basics of Linux so you can troubleshoot any issues that may arise while using Ubuntu.

Always keep your Ubuntu system up to date with the latest security patches and software updates.

Secure Your Ubuntu System: Stay Up to Date

When it comes to keeping your Ubuntu system secure, one of the most crucial practices is to ensure you are always up to date with the latest security patches and software updates. Regularly updating your system not only enhances its performance but also safeguards it against potential vulnerabilities and threats.

Ubuntu, being an open-source operating system, benefits from a vast community of developers and contributors who actively work to identify and address security issues promptly. Updates are released regularly to patch vulnerabilities and improve the overall stability of the system.

To keep your Ubuntu system up to date, follow these simple steps:

- Enable Automatic Updates: Ubuntu can install critical security patches automatically, without manual intervention. On recent releases this is typically handled by the unattended-upgrades package, which installs security updates automatically by default. To review or change this behavior, open “Software & Updates” from the applications menu, select the “Updates” tab, and choose your preferred update settings.

- Use Software Updater: Ubuntu’s Software Updater (formerly called Update Manager) makes it easy to check for available updates manually. Open it from the applications menu and it will list the updates available for your system. From the terminal, “sudo apt update” refreshes the package lists, “apt list --upgradable” shows which packages have newer versions, and “sudo apt upgrade” installs them.

- Install Available Updates: Once you have identified available updates through either automatic updates or the Update Manager, it is essential to install them promptly. Security patches often address vulnerabilities that could be exploited by malicious actors if left unpatched. Installing updates helps ensure that your system remains protected against emerging threats.

- Restart When Required: Some updates, particularly new kernels and core system libraries, only take full effect after a restart. Do not overlook this step, because the old versions of those components stay in use until the system has been restarted.
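The update steps above can also be checked from a script. The following is a minimal Python sketch, assuming a stock Ubuntu install: it reads /etc/apt/apt.conf.d/20auto-upgrades, the file where the automatic-update settings are usually stored, and checks for /var/run/reboot-required, which Ubuntu’s update tools create when an installed update needs a restart. The function names are hypothetical, and the config parser is deliberately simple rather than a full APT configuration parser.

```python
from pathlib import Path

# Assumed default locations on a stock Ubuntu install.
AUTO_UPGRADES = Path("/etc/apt/apt.conf.d/20auto-upgrades")
REBOOT_FLAG = Path("/var/run/reboot-required")
REBOOT_PKGS = Path("/var/run/reboot-required.pkgs")


def auto_upgrades_enabled(conf: Path = AUTO_UPGRADES) -> bool:
    """True if APT::Periodic::Unattended-Upgrade is set to a non-zero value."""
    if not conf.exists():
        return False
    for line in conf.read_text().splitlines():
        line = line.strip()
        # Lines look like: APT::Periodic::Unattended-Upgrade "1";
        if line.startswith("APT::Periodic::Unattended-Upgrade") and '"' in line:
            return line.split('"')[1] not in ("", "0")
    return False


def reboot_status(flag: Path = REBOOT_FLAG, pkgs: Path = REBOOT_PKGS) -> tuple[bool, list[str]]:
    """Return (restart_needed, packages_that_requested_the_restart)."""
    if not flag.exists():
        return False, []
    packages: list[str] = []
    if pkgs.exists():
        packages = [p.strip() for p in pkgs.read_text().splitlines() if p.strip()]
    return True, packages


if __name__ == "__main__":
    print("Automatic updates enabled:", auto_upgrades_enabled())
    needed, packages = reboot_status()
    if needed:
        print("Restart required by:", ", ".join(packages) or "unknown packages")
    else:
        print("No restart pending.")
```

Both files are normally readable without root privileges, so the script can run as an ordinary user.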

By following these steps and maintaining an up-to-date Ubuntu system, you can significantly reduce potential security risks and enjoy a more secure computing experience. Regular updates not only protect your system from known vulnerabilities but also improve its performance and stability.

Remember, security is an ongoing process, and staying vigilant with updates is a fundamental aspect of maintaining a secure Ubuntu system. Make it a habit to check for updates regularly or enable automatic updates to ensure that your Ubuntu system is always fortified against potential threats.

Take control of your Ubuntu system’s security today by embracing the power of regular updates. Safeguard your data, protect your privacy, and enjoy a worry-free computing experience with an up-to-date Ubuntu system.

Make sure you have a good antivirus installed and that it is regularly updated.

Protecting Your Linux Ubuntu System: The Importance of Antivirus

When it comes to computer security, many users tend to overlook the need for antivirus software on their Linux Ubuntu systems. However, it is crucial to understand that even though Linux is generally considered more secure than other operating systems, it is not immune to threats. In this article, we will emphasize the importance of having a good antivirus installed on your Linux Ubuntu system and ensuring that it is regularly updated.

Contrary to popular belief, viruses and malware do exist in the Linux ecosystem. While the number of threats may be relatively lower compared to other platforms, they can still pose a significant risk if left unchecked. Cybercriminals are continuously evolving their tactics and targeting vulnerabilities in all operating systems, including Linux.

Installing a reliable antivirus solution on your Linux Ubuntu system provides an additional layer of protection against potential threats. It helps detect and remove malicious software that may have made its way onto your system, and scanners that support on-access scanning can check files before they are opened or executed. A scanner also helps you avoid passing on Windows malware hidden in files you share with others.

Regularly updating your antivirus software is crucial to its effectiveness. New threats emerge constantly, and antivirus developers release signature and engine updates so their products can recognize them. By keeping your antivirus up to date, you ensure that it can identify and neutralize the latest threats.
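To see why signature updates matter, here is a toy Python sketch of the simplest detection technique: matching file hashes against a list of known-bad signatures. It is an illustration only, not a substitute for a real scanner. Production tools use far richer signatures and heuristics, and `known_bad` here stands in for a signature database that, like a real one, is only as useful as its latest update. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def scan(directory: Path, known_bad: set[str]) -> list[Path]:
    """Return files under `directory` whose SHA-256 appears in `known_bad`."""
    matches = []
    for path in sorted(directory.rglob("*")):
        # Skip symlinks and directories; only regular files are hashed.
        if path.is_symlink() or not path.is_file():
            continue
        try:
            if sha256_of(path) in known_bad:
                matches.append(path)
        except PermissionError:
            continue  # unreadable files are skipped, as in a non-root scan
    return matches
```

For example, `scan(Path.home() / "Downloads", known_bad)` would list any downloaded file whose hash is in the set. Changing a single byte of a file changes its hash, which is one reason real scanners go well beyond exact hash matching.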

Choosing an antivirus solution for your Linux Ubuntu system can feel overwhelming given the number of options. A common starting point is ClamAV, an open-source scanner available from the official repositories, whose freshclam tool keeps its virus signatures current; several commercial vendors also offer products designed for Linux. Research the options and select the one that suits your needs.

It’s worth noting that while an antivirus program adds an extra layer of protection, it should not be your only means of securing your system. Good online habits, such as avoiding suspicious websites and not downloading files from untrusted sources, remain crucial for preventing infections.

In conclusion, safeguarding your Linux Ubuntu system with a good antivirus solution is vital in ensuring its security. While Linux is known for its resilience against threats, it is not invincible. By installing reliable antivirus software and keeping it regularly updated, you can enhance your system’s protection and minimize the risk of falling victim to malware or other malicious activities.

Take the necessary steps to protect your Linux Ubuntu system today. Stay vigilant, keep your antivirus up to date, and enjoy a secure computing experience on your favorite open-source operating system.

Protecting Your Ubuntu System: The Power of Strong Passwords

In the digital age, where our personal and sensitive information is increasingly stored and accessed online, the importance of strong passwords cannot be overstated. When it comes to Linux Ubuntu, a robust password policy is crucial for safeguarding your system’s integrity and ensuring that unauthorized access is kept at bay.

One of the fundamental principles of securing your Ubuntu system is using strong passwords for all your accounts, particularly those associated with root access or sudo privileges. These privileged accounts hold significant power over your system and can potentially cause irreparable damage if they fall into the wrong hands. By implementing strong passwords, you create an additional layer of defense against potential security breaches.

So, what exactly constitutes a strong password? Here are some essential guidelines to follow:

- Length: Aim for at least 12 characters. Each additional character multiplies the number of possible combinations, making a password much harder for attackers to crack.

- Complexity: Utilize a combination of uppercase and lowercase letters, numbers, and special characters within your password. This mix adds complexity and makes it more challenging to guess or crack through brute-force attacks.

- Avoid Personal Information: Stay away from using easily guessable information like your name, birthdate, or common words in your password. Attackers often employ automated tools that can quickly guess such obvious choices.

- Unique Passwords: Avoid reusing passwords across multiple accounts or services. If one account gets compromised, having unique passwords ensures that other accounts remain protected.

- Change When Needed: Change your passwords promptly whenever there is a potential security incident or any sign they may have been exposed. Current guidance, such as NIST SP 800-63B, recommends changing passwords when there is evidence of compromise rather than on a fixed schedule.
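The guidelines above can be turned into a simple checker. This Python sketch tests length, character variety, and whether the password contains any personal details you pass in. The function name is hypothetical, and the 12-character threshold mirrors the list above. It cannot detect reuse across services or common dictionary words, so treat it as a rough first filter rather than a complete strength meter.

```python
import string
from collections.abc import Iterable


def password_problems(password: str, personal_info: Iterable[str] = ()) -> list[str]:
    """Return a list of guideline violations; an empty list means none were found."""
    problems = []
    if len(password) < 12:
        problems.append("shorter than 12 characters")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no digit")
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    lowered = password.lower()
    for item in personal_info:
        # Case-insensitive check for names, birthdates, and similar details.
        if item and item.lower() in lowered:
            problems.append(f"contains personal information: {item!r}")
    return problems
```

For example, `password_problems("Alice-Secure-2024!", ["alice"])` passes every length and character check but still flags the embedded name.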

These practices strengthen not only your Ubuntu system but also your other online accounts, such as email, social media, and banking portals, wherever sensitive information is stored.

Ubuntu makes password management straightforward. You can change your own password in the Users section of Settings or in the terminal with the passwd command, and administrators can reset another account’s password with “sudo passwd username”. You can also use a password manager to generate and securely store complex passwords, so you don’t have to remember them all.
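Password managers generate passwords from a cryptographically secure random source. As a rough illustration of that idea, this Python sketch uses the standard library’s `secrets` module, which is designed for security-sensitive randomness (unlike `random`), and retries until every character class from the guidelines above is present. The function name and the 20-character default are illustrative assumptions.

```python
import secrets
import string

# Letters, digits, and ASCII punctuation, matching the character classes above.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password that contains every character class."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry (rarely needed at this length) until all four classes appear.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate
```

`print(generate_password())` prints a new 20-character password on each run. Retrying, rather than forcing a symbol into a fixed position, keeps every character uniformly random.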

Remember, your Ubuntu system’s security is only as strong as the weakest password associated with it. By using strong passwords for all your accounts, especially those tied to root access or sudo privileges, you significantly reduce the risk of unauthorized access and protect your system from potential harm.

Stay vigilant, stay secure—embrace the power of strong passwords and fortify your Ubuntu experience.

Be aware of the risks associated with using sudo or root privileges when running commands on your system – only use them when absolutely necessary!

The Importance of Caution with Sudo or Root Privileges in Linux Ubuntu

When it comes to managing your Linux Ubuntu system, there may be times when you need to run commands with elevated privileges using sudo or as the root user. While these privileges are essential for performing certain tasks, it is crucial to exercise caution and only use them when absolutely necessary. Understanding the risks associated with sudo or root access can help ensure the security and stability of your system.

One of the primary reasons for exercising caution is that running commands with sudo or as root grants you extensive control over your system. With such power comes the potential for unintentional mistakes that could have severe consequences. A simple typo or a misunderstood command can lead to unintended modifications or deletions of critical files, rendering your system unstable or even unusable.

Moreover, running commands with elevated privileges increases the risk of falling victim to malicious software or exploits. When you execute a command as root, you grant it unrestricted access to your system. If a malicious program manages to exploit this access, it can wreak havoc on your files, compromise sensitive data, or even gain control over your entire system.

To mitigate these risks, it is crucial to adopt best practices when using sudo or root privileges. Here are some guidelines to consider:

- Only use sudo or root access when necessary: Before executing a command with elevated privileges, ask yourself if it is truly required. Limiting the use of sudo or root can minimize the chances of accidental errors and reduce exposure to potential threats.

- Double-check commands: Take extra care when entering commands that involve sudo or root access. Verify each character and ensure that you understand what the command will do before proceeding.

- Use a standard account for daily work: Ubuntu locks the root account by default and grants administrative rights through sudo to members of the sudo group. Where possible, do everyday tasks from an account with limited privileges and reserve sudo for administrative tasks that explicitly require it.

- Keep backups: Regularly back up important files and configurations to an external location. This precaution ensures that even if mistakes occur or your system is compromised, you can restore your data and settings.

- Stay updated: Keep your Linux Ubuntu system up to date with the latest security patches and updates. Regularly check for software vulnerabilities and apply patches promptly to minimize the risk of exploitation.
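One way to apply the “only when necessary” rule to your own scripts is to have them refuse to run with root privileges when they don’t need them. This Python sketch checks the effective user ID, where 0 means root, which is what sudo gives a command. The function names are hypothetical, and `euid` can be passed explicitly so the logic is easy to test.

```python
import os
from typing import Optional


def is_privileged(euid: Optional[int] = None) -> bool:
    """True if the given (or current) effective user ID is root's, which is 0."""
    if euid is None:
        euid = os.geteuid()
    return euid == 0


def require_unprivileged(euid: Optional[int] = None) -> None:
    """Raise if running as root, e.g. because the script was started with sudo."""
    if is_privileged(euid):
        # sudo records the invoking account in the SUDO_USER variable.
        invoker = os.environ.get("SUDO_USER", "unknown user")
        raise PermissionError(
            f"Refusing to run as root (invoked by {invoker}); "
            "this task does not need elevated privileges."
        )
```

Calling `require_unprivileged()` at the top of a backup or cleanup script means an accidental sudo stops the script before it can touch files with root’s unrestricted access.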

By following these precautions, you can reduce the potential risks associated with using sudo or root privileges in Linux Ubuntu. Remember, it is always better to err on the side of caution when it comes to system administration. Taking a proactive approach to security and being aware of the potential dangers will help safeguard your system and ensure a smoother computing experience.

Install additional software only from trusted sources, such as the official Ubuntu repositories or reputable PPAs (Personal Package Archives).

Installing Additional Software on Linux Ubuntu: A Tip for Enhanced Security and Reliability

Linux Ubuntu, renowned for its stability and security, offers a multitude of software options to suit users’ diverse needs. While the default installation covers the essentials, you may need additional software to expand your system’s capabilities. When you do, follow a best practice: install it only from trusted sources, such as the official Ubuntu repositories or reputable PPAs (Personal Package Archives).

The official Ubuntu repositories hold a vast collection of pre-packaged software spanning productivity tools, multimedia software, development environments, and more. Packages in the main repository are maintained and supported by Canonical, while universe is maintained by the community, and APT verifies the cryptographic signatures on the official repository metadata before installing anything from it. Installing from these repositories gives you software built for, and tested with, your Ubuntu release.

To access the official repositories, use APT (Advanced Package Tool), Ubuntu’s package management system. From the command line, “apt search” finds packages and “sudo apt install” installs them, with APT automatically resolving any dependencies. Software installed this way is properly integrated into your system and receives updates along with the rest of it.

Beyond the official repositories, PPAs (Personal Package Archives) are another source of additional software. PPAs are hosted on Launchpad and maintained by individuals or teams who publish packages that may not be available in the official repositories, often newer versions of applications or specialized software. Unlike the official repositories, PPAs are not reviewed by the Ubuntu team, so how far you can trust one depends entirely on its maintainer.

When using PPAs, it is essential to exercise caution and choose reputable sources. Stick with well-known developers or teams with a track record of maintaining quality packages. Before adding a PPA to your system, research its credibility and ensure that it aligns with your security standards.

Once you have identified a PPA that meets your criteria, adding it to your system is straightforward. The graphical “Software & Updates” tool lets you manage software sources, including PPAs, on its “Other Software” tab. Alternatively, you can add a PPA from the command line with “sudo add-apt-repository ppa:owner/archive-name”, replacing the placeholder with the PPA’s actual name.
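Before and after adding a PPA, it can help to review which third-party sources APT is actually using. This Python sketch flags entries in one-line-style APT source files (the classic /etc/apt/sources.list and the *.list files in /etc/apt/sources.list.d/) whose host is not one of the official Ubuntu archive hosts. The host list is an assumption to adjust for your mirror, the function names are hypothetical, and sources stored in the newer deb822 *.sources format are not covered by this simple parser.

```python
from pathlib import Path

# Assumption: official archive hosts; regional mirrors such as
# gb.archive.ubuntu.com match as subdomains.
OFFICIAL_HOSTS = ("archive.ubuntu.com", "security.ubuntu.com", "ports.ubuntu.com")


def third_party_sources(lines: list[str]) -> list[str]:
    """Return URIs of deb/deb-src lines that do not point at an official host."""
    findings = []
    for raw in lines:
        parts = raw.split("#", 1)[0].split()  # drop comments, then tokenize
        if not parts or parts[0] not in ("deb", "deb-src"):
            continue
        # One-line format: deb [options] URI suite [components...]
        uri = next((p for p in parts[1:] if "://" in p), "")
        if not uri:
            continue
        host = uri.split("://", 1)[1].split("/", 1)[0]
        if not any(host == h or host.endswith("." + h) for h in OFFICIAL_HOSTS):
            findings.append(uri)
    return findings


def audit_apt_sources(root: Path = Path("/etc/apt")) -> dict[str, list[str]]:
    """Map each source file to the third-party URIs it contains."""
    files = [root / "sources.list", *sorted((root / "sources.list.d").glob("*.list"))]
    report = {}
    for source_file in files:
        if source_file.exists():
            found = third_party_sources(source_file.read_text().splitlines())
            if found:
                report[str(source_file)] = found
    return report
```

Each URI it reports is worth checking against the PPA’s Launchpad page or the vendor’s own documentation.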

By installing additional software only from the official Ubuntu repositories or reputable PPAs, you improve the security and reliability of your Ubuntu system. Packages in the official repositories are tested for your release and receive security fixes, and well-maintained PPAs are kept up to date by their maintainers. This keeps your system secure and stable while giving you access to a wide range of software.

Remember to keep your system up to date by regularly installing updates provided by Ubuntu. These updates include security patches, bug fixes, and improvements to installed software, further bolstering the overall stability and security of your system.

In conclusion, when expanding the capabilities of your Linux Ubuntu system with additional software, it is crucial to install from trusted sources such as the official Ubuntu repositories or reputable PPAs. By adhering to this best practice, you can ensure that any installed software is reliable, secure, and seamlessly integrated into your Ubuntu environment. Safeguard your system while enjoying an extensive selection of software tailored to meet your needs on Linux Ubuntu.

Regularly back up important data in case of system failure or other unforeseen events.

The Importance of Regularly Backing Up Your Data on Linux Ubuntu

In the digital age, where our lives are increasingly intertwined with technology, it is crucial to protect our valuable data from potential loss. Whether it’s important documents, cherished memories in the form of photos and videos, or critical work files, losing them due to system failure or other unforeseen events can be devastating. That is why regularly backing up your data on Linux Ubuntu is a vital practice that should not be overlooked.

Linux Ubuntu provides several reliable and convenient ways to back up your data. One popular option is Déjà Dup, a user-friendly backup application (shown as “Backups”) that is preinstalled on many Ubuntu releases and otherwise available from the repositories. It lets you schedule automatic backups of your files and folders to an external hard drive, a network location, or, depending on the version, a cloud service such as Google Drive.

By setting up regular backups with Déjà Dup, you can ensure that your important data remains safe and accessible even if your system encounters issues. In the event of hardware failure, accidental deletion, or malware attacks, having a recent backup readily available will significantly reduce the impact of these unfortunate circumstances.

Another advantage of regularly backing up your data is the ability to recover previous versions of files. Sometimes we make changes to documents or mistakenly delete something important only to realize later that we need it back. With proper backups in place, you can easily retrieve earlier versions of files or restore deleted items without hassle.
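The idea of keeping several previous versions can be illustrated with a short Python sketch. It writes a timestamped .tar.gz archive of a folder with `shutil.make_archive` and deletes all but the newest few, so earlier versions stay recoverable. This is a simplified stand-in, not how Déjà Dup works internally (Déjà Dup also handles incremental backups, encryption, and scheduling); the function name, paths, and retention count are assumptions.

```python
import shutil
from datetime import datetime
from pathlib import Path


def make_backup(source: Path, dest_dir: Path, keep: int = 5) -> Path:
    """Archive `source` into `dest_dir` and keep only the `keep` newest archives."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    dest_dir.mkdir(parents=True, exist_ok=True)
    # Fixed-width timestamps sort chronologically as plain strings.
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S-%f")
    archive = shutil.make_archive(
        str(dest_dir / f"{source.name}-{stamp}"),
        "gztar",
        root_dir=source.parent,
        base_dir=source.name,
    )
    backups = sorted(dest_dir.glob(f"{source.name}-*.tar.gz"))
    for old in backups[:-keep]:
        old.unlink()  # prune the oldest versions beyond the retention limit
    return Path(archive)
```

A call such as `make_backup(Path.home() / "Documents", Path("/media/backup-drive/backups"))`, where the drive path is hypothetical, adds one new version each time it runs; any retained version can be restored with `shutil.unpack_archive`.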

It’s worth noting that while using external storage devices like external hard drives or USB flash drives for backups is a common practice, it’s advisable to keep an offsite backup as well. Storing copies of your data in a different physical location protects against scenarios like theft, fire, or natural disasters that may affect both your computer and its peripherals.

Remember that creating backups should not be a one-time task but rather an ongoing process. As you continue to create new files and modify existing ones, updating your backups regularly will ensure that your most recent data is protected. Setting up automated backups with Déjà Dup or other backup solutions available for Linux Ubuntu can simplify this process and provide peace of mind.

In conclusion, regularly backing up your important data on Linux Ubuntu is a crucial practice to safeguard against system failures, accidental deletions, or other unforeseen events. By utilizing tools like Déjà Dup and adopting a consistent backup routine, you can protect your valuable files, recover previous versions if needed, and mitigate the potential impact of data loss. Take the proactive step today to secure your digital assets by implementing regular backups and enjoy the peace of mind that comes with knowing your data is safe.

Familiarize yourself with the basics of Linux so you can troubleshoot any issues that may arise while using Ubuntu.

Familiarize Yourself with Linux Basics: Empower Your Ubuntu Experience

As you embark on your Linux Ubuntu journey, it’s essential to familiarize yourself with the basics of Linux. By doing so, you equip yourself with the knowledge and skills needed to troubleshoot any issues that may arise while using Ubuntu. Understanding the fundamentals of Linux not only enhances your overall experience but also empowers you to take full control of your operating system.

Linux, at its core, is built on a robust and secure foundation. It operates differently from traditional operating systems like Windows or macOS, which may require a slight adjustment in approach. By dedicating some time to learning the basics, you can navigate through Ubuntu’s powerful features more effectively and resolve any potential problems that may occur.

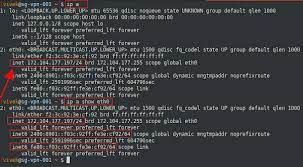

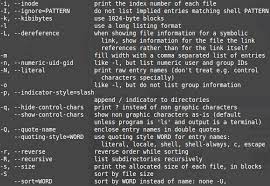

One of the first steps in familiarizing yourself with Linux is getting acquainted with the command line interface (CLI). While Ubuntu provides a user-friendly graphical interface, knowing how to use the CLI can greatly enhance your troubleshooting capabilities. The command line allows for precise control over your system, enabling you to perform advanced tasks and access additional tools not readily available through graphical interfaces.

Learning basic commands for navigating directories, creating files and folders, managing permissions, and installing software with the package manager will prove invaluable for troubleshooting. Knowing how to read log files, such as those under /var/log or the systemd journal viewed with journalctl, can also provide crucial insights into system errors and application crashes.
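As a small example of reading logs, this Python sketch pulls the most recent lines mentioning errors or failures out of any log you give it. The keyword pattern and function name are assumptions. On Ubuntu, /var/log/syslog (on releases that still write it) is typically readable by members of the adm group, and `journalctl -p err` is a built-in alternative for the systemd journal.

```python
import re
from collections.abc import Iterable

# Assumed keywords; extend the pattern for the services you care about.
PROBLEM = re.compile(r"\b(error|fail(?:ed|ure)?|critical)\b", re.IGNORECASE)


def recent_problems(lines: Iterable[str], limit: int = 20) -> list[str]:
    """Return up to `limit` of the most recent lines matching PROBLEM."""
    if limit <= 0:
        return []
    hits = [line.rstrip("\n") for line in lines if PROBLEM.search(line)]
    return hits[-limit:]
```

For example, `with open("/var/log/syslog", errors="replace") as log: print("\n".join(recent_problems(log)))` prints the last 20 matching lines; `errors="replace"` keeps the scan going if a log contains invalid UTF-8.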

Another fundamental aspect of Linux is its file system structure. Unlike Windows, which assigns a separate drive letter to each drive or partition, Linux organizes everything under a single root directory (/), with additional drives mounted as directories within that tree. Familiarizing yourself with this hierarchy will help you locate files and directories efficiently and troubleshoot issues related to file permissions or disk space.
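Because every drive appears somewhere under /, a quick way to troubleshoot disk-space problems is to check how full the filesystems behind a few key directories are. This Python sketch reports roughly what `df -h` shows, using the standard library’s `shutil.disk_usage`; the default paths, the function names, and the 90% warning threshold are assumptions.

```python
import shutil
from collections.abc import Iterable


def usage_percent(paths: Iterable[str] = ("/", "/home", "/var")) -> dict[str, float]:
    """Percentage of space used on the filesystem holding each existing path."""
    report = {}
    for path in paths:
        try:
            usage = shutil.disk_usage(path)
        except FileNotFoundError:
            continue  # skip directories that do not exist on this system
        if usage.total:
            report[path] = round(usage.used / usage.total * 100, 1)
    return report


def nearly_full(report: dict[str, float], threshold: float = 90.0) -> list[str]:
    """Paths whose filesystem is at or above `threshold` percent used."""
    return [path for path, pct in report.items() if pct >= threshold]
```

If /home and / live on the same filesystem, they report the same figure, because `disk_usage` describes the filesystem containing a path rather than the directory itself.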

Moreover, understanding users and groups in Linux is essential for maintaining security and managing access rights. Knowing how read, write, and execute permissions apply separately to a file’s owner, its group, and all other users empowers you to troubleshoot permission errors and configure access for different users or applications.
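The permission string that `ls -l` prints, such as -rw-r--r--, holds read, write, and execute bits for three classes: the file’s owner, its group, and everyone else. This Python sketch uses the standard library’s `stat.filemode` to produce the same string for a file and then splits it into per-class flags; the helper names are hypothetical.

```python
import stat
from pathlib import Path


def mode_string(path: str) -> str:
    """Return the ls-style permission string, e.g. '-rw-r--r--'."""
    return stat.filemode(Path(path).stat().st_mode)


def explain(mode: str) -> dict[str, dict[str, bool]]:
    """Split an ls-style string into read/write/execute flags per class."""
    result = {}
    for i, who in enumerate(("owner", "group", "others")):
        r, w, x = mode[1 + 3 * i : 4 + 3 * i]
        # 's'/'t' mean setuid/setgid/sticky plus execute; 'S'/'T' mean no execute.
        result[who] = {"read": r == "r", "write": w == "w", "execute": x in ("x", "s", "t")}
    return result
```

For instance, `explain("-rwxr-x---")` reports that the group can read and execute but not write, and that others have no access at all.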

The Linux community is vast and supportive, with numerous resources available to help you learn and troubleshoot any issues you encounter. Online forums, documentation, and tutorials provide valuable insights and solutions shared by experienced Linux users. By actively engaging with the community, you can expand your knowledge and seek guidance when facing challenges.

Remember, troubleshooting is a continuous learning process. As you encounter various issues while using Ubuntu, don’t be discouraged. Instead, embrace them as opportunities to expand your understanding of Linux and refine your troubleshooting skills. With time and practice, you’ll become more proficient in resolving problems efficiently.

In conclusion, familiarizing yourself with the basics of Linux is a crucial step towards becoming a confident Ubuntu user. By understanding the command line interface, file system structure, permissions, and engaging with the Linux community, you empower yourself to troubleshoot any issues that may arise. Embrace this learning journey and unlock the full potential of Ubuntu while gaining valuable skills applicable to the broader world of Linux.